Schrödinger's Backup: "The condition of any backup is unknown until a restore is attempted"

So you're backing up your databases, but are you regularly checking that the backups are actually useable? Uphold will help you automatically test them by downloading the backup, decompressing, loading and then running programmatic tests against it that you define to make sure they really have what you need.

- Preface

- Prerequisites

- How does it work?

- Installation

- Configuration

- Running

- Scheduling

- API

- Automation Tool Support (Jenkins, TeamCity, etc)

- Development

This project is very new and subsequently very beta so contributions and pulls are very much welcomed. We have a TODO file with things that we know about that would be awesome if worked on.

- Backups

- Docker (>= v1.3.*) with the ability to talk to the Docker API

In order to make the processes are repeatable as possible all the code and databases are run inside single process Docker containers. There are currently three types of container, the ui, the tester and the database itself. Each triggers the next...

uphold-ui

\

-> uphold-tester

\

-> engine-container

This way each time the process is run, the containers are fresh and new, they hold no state. So each time the database is imported into a cold database.

The output of each process run is a log and a state file and these are stored in /var/log/uphold by default. The UI reads these files to display the state of the runs occurring, no other state is stored in the system.

/var/log/uphold

/var/log/uphold/1453489253_my_db_backup.log

/var/log/uphold/1453489253_my_db_backup_ok

This is the output of a backup run for 'my_db_backup' that was started at 1453489253 unix epoch time. The log file contains the full output of the run, and the state file is an empty file, it's name shows the status of the run...

okBackup was declared good, was transported, loaded and tested successfullyok_no_testBackup was successfully transported and loaded into the DB, but there were no tests to runbad_transportTransport failedbad_engineContainer did not open it's port in a timely mannerbad_testsAt least one of the programmatic tests failedbadAn error occurred either in transport or loading into the db engine

Logs are not automatically rotated or removed, it is left up to you to decide how long you want to keep them. Once they become compressed, they will disappear from the UI. The same goes for the exited Docker containers of 'uphold-tester', they are left on the system incase you wish to inspect them. The database containers however are wiped after they are used.

Most of the installation goes around configuring the tool, you must create the following directory structure on the machine you want to run Uphold on...

/etc/uphold/

/etc/uphold/conf.d/

/etc/uphold/engines/

/etc/uphold/transports/

/etc/uphold/tests/

/var/log/uphold

Create a global config in /etc/uphold/uphold.yml (even if you leave it empty), the settings inside are...

log_level(default:DEBUG)- You can decrease the verbosity of the logging by changing this to

INFO, but not recommended

- You can decrease the verbosity of the logging by changing this to

backup_tmp_path(default:/etc/uphold/tmp)- If you need to override this you can, used for when eg: S3 backups are downloaded onto the HOST machine so it can be mounted in the engine.

config_path(default:/etc/uphold)- Generally only overridden in development on OSX when you need to mount your own src directory

docker_log_path(default:/var/log/uphold)- Generally only overridden in development on OSX when you need to mount your own src directory

docker_url(default:unix:///var/run/docker.sock)- If you connect to Docker via a TCP socket instead of a Unix one, then you would supply

tcp://example.com:5422instead (untested)

- If you connect to Docker via a TCP socket instead of a Unix one, then you would supply

docker_container(default:vjeeva/uphold-tester)- If you need to customize the docker container and use a different one, you can override it here

docker_tag(default:latest)- Can override the Docker container tag if you want to run from a specific version

docker_mounts(default:none)- If your backups exist on the host machine and you want to use the

localtransport, the folders they exist in need to be mounted into the container. You can specify them here as a YAML array of directories. They will be mounted at the same location inside the container

- If your backups exist on the host machine and you want to use the

ui_datetime(default:%F %T %Z)- Overrides the strftime used by the UI to display the outcomes, useful if you want to make it smaller or add info

If you change the global config you will need to restart the UI docker container, as some settings are only read at launch time.

log_level: DEBUG

backup_tmp_path: /etc/uphold/tmp

config_path: /etc/uphold

docker_log_path: /var/log/uphold

docker_url: unix:///var/run/docker.sock

docker_container: vjeeva/uphold-tester

docker_tag: latest

docker_mounts:

- /var/my_backups

- /var/my_other_backups

Each config is in YAML format, and is constructed of a transport, an engine and tests. In your /etc/uphold/conf.d directory simply create as many YAML files as you need, one per backup. Configs in this directory are re-read, so you don't need to restart the UI container if you add new ones.

enabled: true

name: s3-mongo

engine:

type: mongodb

settings:

timeout: 10

database: your_db_name

transport:

type: s3

settings:

region: us-west-2

access_key_id: your-access-key-id

secret_access_key: your-secret-access-key

bucket: your-backups

path: mongodb/systemx/{date0}

filename: mongodb_{date1}.tar

dates:

- date_format: '%Y.%m.%d'

- date_format: '%Y%m%d'

# ... add as many as needed

folder_within: mongodb/databases/MongoDB

tests:

- test_structure.rb

- test_data_integrity.rb

trigger:

type: local

logs:

type: local

enabledtrueorfalse, allows you to disable a config if needs be

name- Just so that if it's referenced anywhere, you have a nicer name

See the sections below for how to configure Engines, Transports and Tests.

Transports are how you retrieve the backup file itself. They are also responsible for decompressing the file, the code supports nested compression (compressed files within compressed files). Currently implemented transports are...

- S3

- Local file

Custom transports can also be loaded at runtime if they are placed in /etc/uphold/transports. If you need extra rubygems installed you will need to create a new Dockerfile with the base set to uphold-tester and then override the Gemfile and re-bundle. Then adjust your uphold.yml to use your new container.

Transports all inherit these generic parameters...

path- This is the path to the folder that the backup is inside, if it contains a date replace it with

{date}, eg./var/backups/2016-01-21would be/var/backups/{date}

- This is the path to the folder that the backup is inside, if it contains a date replace it with

filename- The filename of the backup file, if it contains a date replace it with

{date}, eg.mongodb-2016-01-21.tarwould bemongodb-{date}.tar

- The filename of the backup file, if it contains a date replace it with

date_format(default:%Y-%m-%d)- If your filename or path contains a date, supply it's format here

date_offset(default:0)- When using dates the code starts at

Date.todayand then subtracts this number, so for checking a backup that exists for yesterday, you would enter1

- When using dates the code starts at

folder_within- Once your backup has been decompressed it may have folders inside, if so, you need to provide where the last directory is, this generally can't be programmatically found as some database backups may contain folders in their own structure.

compressed- Specify if your path leads to an compressed archive with a boolean value (

trueorfalse).

- Specify if your path leads to an compressed archive with a boolean value (

The S3 transport allows you to pull your backup files from a bucket in S3. It has it's own extra settings...

region- Provide the region that your S3 bucket resides in (eg.

us-west-2)

- Provide the region that your S3 bucket resides in (eg.

access_key_id- AWS access key that has privileges to read from the specified bucket

secret_access_key- AWS secret access key that has privileges to read from the specified bucket

Paths do not need to be complete with S3, as it provides globbing capability. So if you had a path like this...

my-service-backups/mongodb/2016.01.21.00.36.03/mongodb.tar

Theres no realistic way for us to re-create that date, so you would do this instead...

path: my-service-backups/mongodb/{date}

filename: mongodb.tar

date_format: '%Y.%m.%d'

As the path is sent to the S3 API as a prefix, it will match all folders, the code then picks the first one it matches correctly. So be aware that not being specific enough with the date_format could cause the wrong backup to be tested.

transport:

type: s3

settings:

region: us-west-2

access_key_id: your-access-key-id

secret_access_key: your-secret-access-key

bucket: your-backups

path: mongodb/systemx/{date}

filename: mongodb.tar

date_format: '%Y.%m.%d'

folder_within: mongodb/databases/MongoDB

The local transport allows you to pull your backup files from the same machine that is running the Docker container. Be aware, since this code runs within a container you will need to add the volume that contains the backup when starting up. We auto-mount /var/uphold to the same place within the container to reduce confusion.

It uses the generic ones, filename, path and folder_within, there is one extra setup. When mounting the UI container, you must mount the top level folder of the backup location (above what you set as path) as a mount with the format mount-#{transport-type}-#{name-of-config}. When the Tester container runs, for backups it will mount at the location specified in docker_mounts, in the same location in the container so your path will be unaffected due to the UI container's behaviour.

For instance, if backups are on the host machine in /home/user/YYYY/MM/ and the backups are named YYMMDD.dump, and your config is named local-DB, then the following will need to be set. The conf.d YAML file will need its path to be /home/user/{date0}, and the filename to be {date1}.dump. The UI container will need the mount -v /:/mount-local-local-DB and the uphold.yml will need to specify in docker_mounts: /home/user. The mount will be read-only.

transport:

type: local

settings:

path: /var/uphold/mongodb

filename: mongodb.tar

folder_within: mongodb/databases/MongoDB

Engines are used to load the backup that was retrieved by the transport into the database. Databases are started inside fresh docker containers each time so no installation is required. Currently supported databases are...

- MongoDB

- MySQL

- PostgreSQL

Custom engines can also be loaded at runtime if they are placed in /etc/uphold/engines

Engines all inherit these generic parameters, but are usually significantly easier to configure when compared to transports...

type- The name of the engine class you want to use (eg.

mongodb)

- The name of the engine class you want to use (eg.

database- The name of the database you want to recover, as your backup may contain multiple

port- The port number that the database will run on (engine will provide a sane default)

docker_image- The name of the Docker container (engine will provide a sane default)

docker_tag- The tag of the Docker container (engine will provide a sane default)

timeout(default:10)- The number of seconds you will give the container to respond on it's TCP port. You may need to increase this if you start many backup tests at the same time.

Unless you need to change any of the defaults, a standard configuration for MongoDB will look quite small.

engine:

type: mongodb

settings:

database: your_db_name

engine:

type: mongodb

settings:

database: your_db_name

docker_image: mongo

docker_tag: 3.2.1

port: 27017

engine:

type: mysql

settings:

database: your_database_name

sql_file: your_sql_file.sql

engine:

type: mysql

settings:

database: your_database_name

docker_image: mariadb

docker_tag: 5.5.42

port: 3306

sql_file: MySQL.sql

engine:

type: postgresql

settings:

database: your_database_name

sql_file: your_sql_file.sql

You can specify the username for accessing the postgres server (other than the default postgres) for when you run the tests. Furthermore, you can specify the file type. Since the file type can be anything, the restore_type must be set to know which command to restore with. If psql, then the restore will be done with the psql command, which takes a .sql file. If pg_restore, then the restore will be done with the pg_restore command, which takes a dump file.

engine:

type: postgresql

settings:

database: your_database_name

docker_image: postgres

docker_tag: 9.5.0

extension: .sql

restore_type: psql

port: 5432

sql_file: PostgreSQL.sql

username: postgres

cores: 16

command_timeout: 7200 # Seconds is taken here

Tests are the final step in configuration. They are how you validate that the data contained within your backup is really what you want, and that your backup is operating correctly. Tests are written in Ruby using Minitest, this gives you the most flexibility in writing tests programmatically as it supports both Unit & Spec tests. To configure a test you simply provide an array of ruby files you want to run...

tests:

- test_structure.rb

- test_data_integrity.rb

Tests should be placed within the /etc/uphold/tests directory, all files inside will be volume mounted into the container so if you need extra files they are available to you.

We need to establish a connection to the database, and the values will not be known in advance. So they will be provided to you by environmental variables UPHOLD_IP, UPHOLD_PORT and UPHOLD_DB. You must use these when connecting to your database.

require 'minitest/autorun'

require 'mongoid'

class TestClients < Minitest::Test

Mongo::Logger.logger.level = Logger::FATAL

@@mongo = Mongo::Client.new("mongodb://#{ENV['UPHOLD_IP']}:#{ENV['UPHOLD_PORT']}/#{ENV['UPHOLD_DB']}")

def test_that_we_can_talk_to_mongo

assert_equal 1, @@mongo.collections.count

end

end

Obviously this is just a simple test, but you can write any number of tests you like. All must pass in order for the backup to be considered 'good'.

This setting determines if in the UI, when one runs a build, would it be run locally or dispatched to an outside URL? Referring to Automation Tool Support, we can either run backups locally by dispatching a container from within the UI container (assuming the entire system runs on a single host machine), or we can trigger a build via URL for tools like Jenkins or TeamCity.

If wanting to trigger locally, the type just needs to be set to local and no other parameter is needed. If wanting to trigger an external build, we change the type to external, then the url to your url. This is set in your conf.d config YAML.

NOTE: If trigger is unset, default will be local.

Example (local):

trigger: type: local

Example (external):

trigger: type: external settings: url: https://url-here.com/api/something?something

This setting determines the log dump/retrieval location. Again referring to Automation Tool Support](#automationtoolsupport), we can either dump logs locally or to a remote location (eg: S3). Using the transport drivers supported, we can set this in the GLOBAL uphold YAML, /etc/uphold/uphold.yml.

If you want your logs dumped locally, the location just needs to be local. Otherwise, location just needs to be s3, same name as the transport class. Further parameters depends on the driver.

NOTE: If logs is unset, default will be local and in /var/log/uphold. Also, if the log location is NOT /var/log/uphold, then your run command for the UI container MUST change!

Example (local):

logs: location: local settings: path: /var/log/uphold

Example (s3):

logs: location: s3 settings: region: us-west-2 access_key_id: your-access-key-id secret_access_key: your-secret-access-key bucket: your-backup-logs path: some/folders # Note this is optional

Once you have finished your configuration, to get the system running you only need to start the Docker container called 'uphold-ui'.

docker pull vjeeva/uphold-ui:latest

docker run \

--rm \

-p 8079:8079 \

-v /var/run/docker.sock:/var/run/docker.sock \

-v /etc/uphold:/etc/uphold \

-v /var/log/uphold:/var/log/uphold \

vjeeva/uphold-ui:latest

- You must make sure that you mount in the

docker.sockfrom wherever it resides. - Feel free to change the port number if you don't want it to start up on port 8079.

- Mount in the config and log directories as otherwise it can't read your configuration.

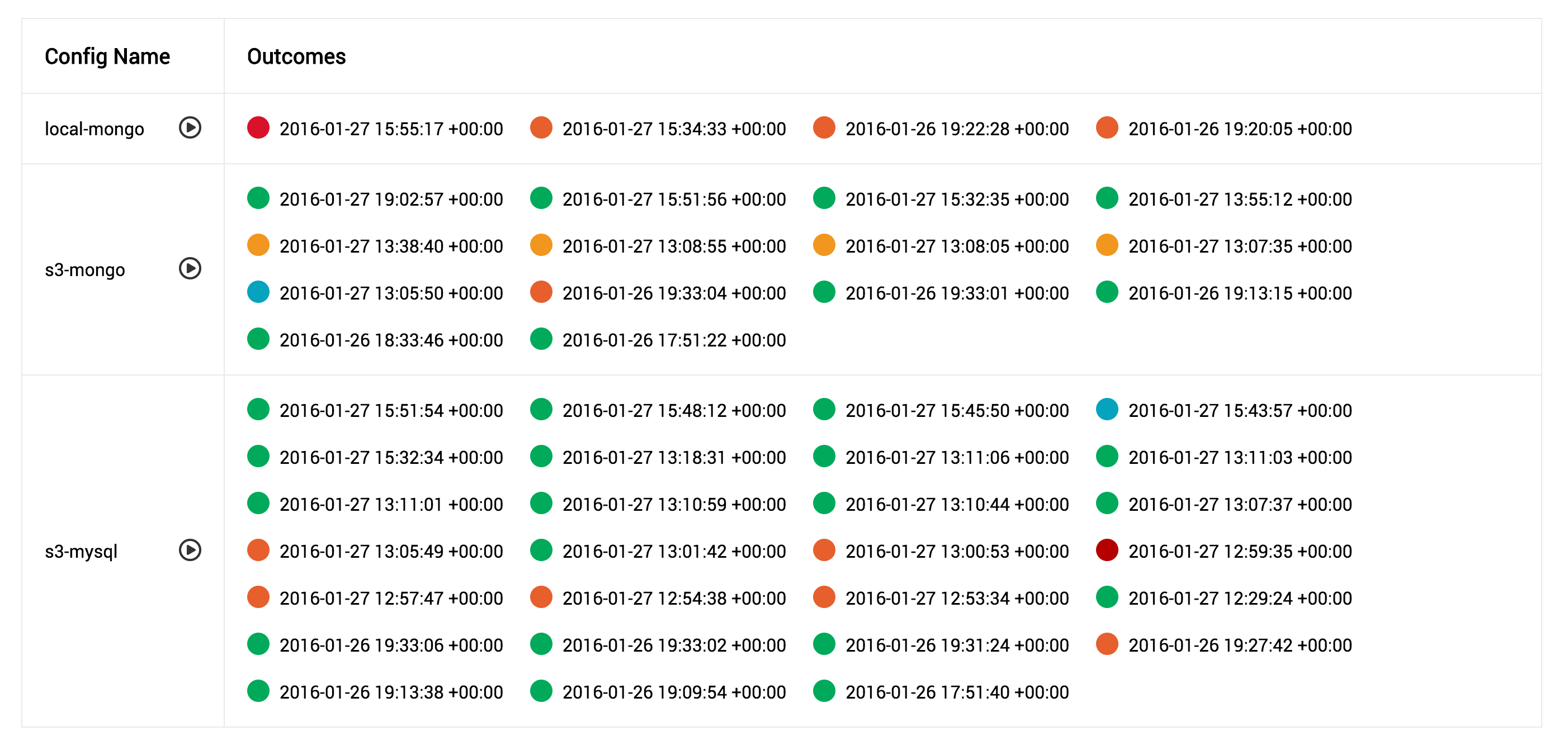

Once the container is live you can browse to it, see all previous available runs for a backup and the states the ended in. You can manually start a backup test from here if you want to.

No option to schedule backup runs exists at present. Until one exists you can use the API to trigger backup runs to start. This way you can schedule however you like, crontab, notifier or any other service capable of sending a POST.

This will return all the available backup runs for the config name provided in JSON format...

[

{

"epoch": 1453921377,

"state": "ok",

"filename": "1453921377_s3-mongo.log"

},

{

"epoch": 1453909916,

"state": "ok",

"filename": "1453909916_s3-mongo.log"

}

]

This will return a plain text string of the state of the last backup run for the config name provided. If no runs were available, it will return none

You must pass the name of the config you want to trigger in a form field called name. It will then start the run and return 200. An example of how to trigger a backup run for the config named s3-mongo...

curl --data "name=s3-mongo" http://ip.of.your.container/api/1.0/backup

If one wants to have backups verified automatically using a tool like Jenkins or TeamCity, this will be fairly painless! As mentioned above in the architecture of this framework, the UI container spawns the Tester container and it will connect to a DB engine container to load a backup and run tests. The global uphold.yml is used by the UI container to know how to create the Tester container, but if using an automation tool, the tool can set up the environment needed for the container to run. Therefore, for example, a TeamCity agent can spawn a container within a build and one can set up the environment in the build before running the tester container. For logs, this will force the tester container to dump logs to a remote place, which will be a flag in the config YAML.

To have your tool of choice run a tester container, it will need to do the following:

- Retrieve the relevant

conf.dYAML file for the backup being run - Retrieve timestamp for the backup that is being targeted (in Unix format)

- Copy the

conf.dYAML file into/etc/uphold/conf.d/on the agent machine - Create and run the tester container (if default, use

vjeeva/uphold-tester) with the local mount/etc/uphold(and/var/log/upholdif you plan to keep your logs on the agent only), and/etc/uphold/tmpunless specified differently in your global YAML for tmp backups, and any and all mounts indocker_mounts - Do the appropriate cleanup

To aid with development there is a helper script in the root directory called build_and_run and build_and_inspect which will build or inspect the Dockerfile and then run it using some default options. Since otherwise testing is a bit of a nightmare when trying to talk to containers on your local machine. Various folders from within the project directory will be auto-mounted into the container...

dev/uphold.yml->/etc/uphold/uphold.ymldev/conf.d->/etc/uphold/conf.ddev/tests->/etc/uphold/testsdev/custom/engines->/etc/uphold/enginesdev/custom/transports->/etc/uphold/transportsdev/blobs->/var/backups

Remember to place a uphold.yml config of your own in the dev/config directory.