This project has two main goals:

-

Mining from social media:

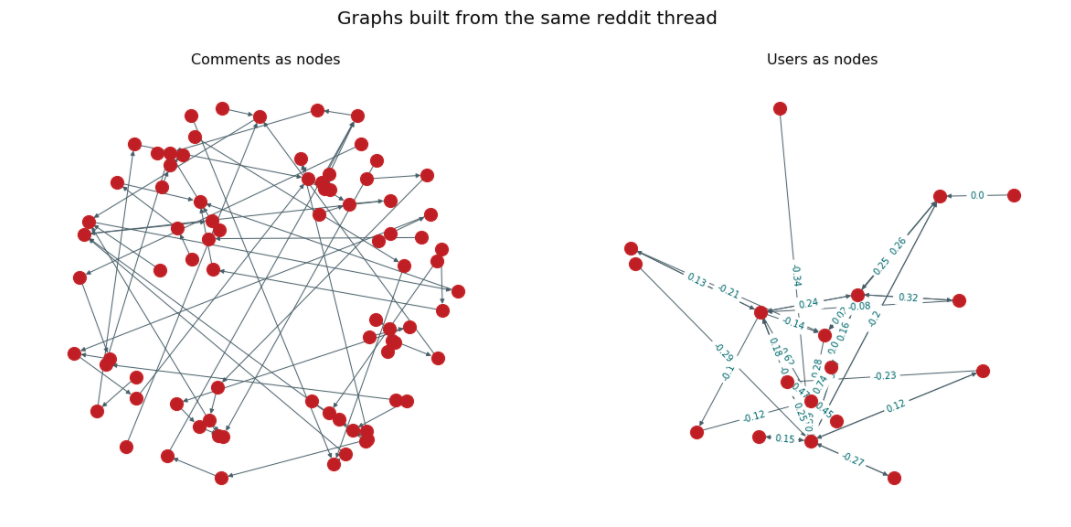

- Problem: mining arguments from online debates (considering comments as arguments) results in a tree

- Solution: treat users as nodes instead, basically starting from the comments tree and merging arguments with the same user

-

Generate eand rank extensions:

- Problem: find an hybrid approach between Extensional-Semantics and Ranking-Based Semantics

- Solution: compute subsets that satisfy an arbitrary semantic (preferred, conflict-free, ecc) and then rank these subsets by a function f: Subset -> N
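The merging step can be sketched as follows. This is a minimal illustration, not the project's crawler code: the `(comment_id, parent_id, author)` record, the function name `comments_to_user_graph`, and the choice to read every reply as an attack on the parent comment's author are all assumptions made for the example.

```python
from typing import Dict, List, Optional, Set, Tuple

# Hypothetical comment record: (comment_id, parent_id, author); parent_id is None for the root post.
Comment = Tuple[str, Optional[str], str]


def comments_to_user_graph(comments: List[Comment]) -> Tuple[Set[str], Set[Tuple[str, str]]]:
    """Collapse a comment tree into a user-level graph.

    Assumption: a reply is read as an attack from the replying user on the
    author of the parent comment. Self-replies are dropped.
    """
    author_of: Dict[str, str] = {cid: user for cid, _, user in comments}
    users: Set[str] = set(author_of.values())
    edges: Set[Tuple[str, str]] = set()
    for cid, parent, user in comments:
        if parent is None or parent not in author_of:
            continue  # root post, or parent outside the crawled thread
        target = author_of[parent]
        if target != user:
            edges.add((user, target))  # repeated replies between the same users merge into one edge
    return users, edges


thread = [
    ("c1", None, "alice"),
    ("c2", "c1", "bob"),
    ("c3", "c2", "alice"),
    ("c4", "c1", "bob"),
    ("c5", "c3", "carol"),
]
users, edges = comments_to_user_graph(thread)
print(sorted(users))  # ['alice', 'bob', 'carol']
print(sorted(edges))  # [('alice', 'bob'), ('bob', 'alice'), ('carol', 'alice')]
```

In the sample thread alice and bob end up attacking each other, so the user graph contains a cycle that the original comment tree could not express.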

The goals of argumentation are to identify, analyze and evaluate arguments. In particular, we give reasons to support or criticize a claim that is doubtful. In giving and evaluating reasons we engage in a reasoning process: we make connections between premises and conclusions.

One of the most influential computational models of argument is Dung’s Argumentation Framework (AF), which is essentially a directed graph whose vertices are abstract arguments and whose directed edges are attacks between them. The abstract nature of the arguments, together with its relationship to non-monotonic reasoning formalisms, yields a simple and very general model that makes it easy to understand which sets of arguments are mutually compatible. The precise conditions for their acceptance are defined by the semantics.
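As a minimal sketch of the definitions behind Dung's model (the `AF` class and its method names are illustrative, not part of this project), a framework can be stored as a set of arguments plus a set of attack pairs. A set is conflict-free if none of its members attack each other, and admissible if it is also able to defend each of its members against every attacker.

```python
from typing import Set, Tuple

Arg = str
Attack = Tuple[Arg, Arg]


class AF:
    """A Dung argumentation framework: arguments plus a binary attack relation."""

    def __init__(self, args: Set[Arg], attacks: Set[Attack]):
        self.args = set(args)
        self.attacks = set(attacks)

    def attackers(self, a: Arg) -> Set[Arg]:
        return {x for (x, y) in self.attacks if y == a}

    def is_conflict_free(self, s: Set[Arg]) -> bool:
        # No argument in s attacks another argument in s (self-attacks included).
        return not any((x, y) in self.attacks for x in s for y in s)

    def defends(self, s: Set[Arg], a: Arg) -> bool:
        # Every attacker of a is attacked by some member of s.
        return all(any((d, b) in self.attacks for d in s) for b in self.attackers(a))

    def is_admissible(self, s: Set[Arg]) -> bool:
        return self.is_conflict_free(s) and all(self.defends(s, a) for a in s)


af = AF({"a", "b", "c"}, {("a", "b"), ("b", "c")})
print(af.is_conflict_free({"a", "c"}))  # True
print(af.is_admissible({"c"}))          # False: b attacks c and nothing in {c} attacks b
print(af.is_admissible({"a", "c"}))     # True: a defends c against b
```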

Semantics can be divided into two large families:

- Extension-based Semantics: these define acceptable subsets of the arguments, called extensions, which correspond to the various positions one may take based on the available arguments.

- Ranking-based Semantics: a more recent family of acceptability semantics in which no acceptable subsets are computed and arguments are not simply accepted or rejected; instead, an acceptability degree is assigned to each argument, which induces a ranking over the arguments.
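To make the contrast concrete, here is a sketch of one well-known ranking-based semantics, the h-categoriser, where the degree of an argument is 1 / (1 + the sum of its attackers' degrees), computed by fixed-point iteration. It is shown only as an example of the family; this README does not say the project uses it, and the function name and the `eps`/`max_iter` parameters are illustrative.

```python
from typing import Dict, Set, Tuple


def h_categoriser(args: Set[str], attacks: Set[Tuple[str, str]],
                  eps: float = 1e-9, max_iter: int = 10_000) -> Dict[str, float]:
    """Degree of a = 1 / (1 + sum of the degrees of a's attackers), by fixed-point iteration."""
    attackers = {a: [x for (x, y) in attacks if y == a] for a in args}
    deg = {a: 1.0 for a in args}  # start every argument at the maximal degree
    for _ in range(max_iter):
        new = {a: 1.0 / (1.0 + sum(deg[b] for b in attackers[a])) for a in args}
        if max((abs(new[a] - deg[a]) for a in args), default=0.0) < eps:
            return new
        deg = new
    return deg


deg = h_categoriser({"a", "b", "c"}, {("a", "b"), ("b", "c")})
ranking = sorted(deg, key=deg.get, reverse=True)
print(ranking)  # ['a', 'c', 'b']
```

The unattacked argument a gets degree 1; b, attacked by a, gets 0.5; c is attacked only by the weaker b and gets 2/3, so it ranks above b. Unlike an extension, nothing is accepted or rejected outright.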

The goal of this project is to propose a hybrid approach between the two families of semantics. The extensions produced by a given semantics are all equally acceptable; by ranking them, however, we impose an ordering based on what we value most. This approach ranks the subsets generated by an extension-based semantics using metrics of choice, which can range from real-world domain metrics to more abstract graph-based metrics, chosen according to the situation.

Two steps are needed to generate a ranking of the subsets:

- First, we generate subsets of arguments using one of the classical Extension-based Semantics.

- Then we compute a score for each subset (e.g. the closeness between the arguments inside the subset, or the similarity between the texts associated with the nodes in the subset) and rank the subsets by that score.
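The two steps can be sketched in Python for small frameworks. This brute-force version is an illustration, not the project's Prolog reasoner: it enumerates admissible sets, keeps the maximal ones (the preferred extensions), and ranks them with a hypothetical score f(S) = number of arguments outside S that S attacks, which stands in for whichever metric (closeness, text similarity, ...) is chosen in practice.

```python
from itertools import combinations
from typing import Callable, FrozenSet, List, Set, Tuple

Attacks = Set[Tuple[str, str]]


def admissible_sets(args: Set[str], attacks: Attacks) -> List[FrozenSet[str]]:
    """Enumerate every admissible subset by brute force (exponential; small AFs only)."""

    def conflict_free(s: FrozenSet[str]) -> bool:
        return not any((x, y) in attacks for x in s for y in s)

    def defended(s: FrozenSet[str], a: str) -> bool:
        # Every attacker b of a must itself be attacked by some member of s.
        return all(any((d, b) in attacks for d in s) for (b, t) in attacks if t == a)

    items = sorted(args)
    result = []
    for k in range(len(items) + 1):
        for combo in combinations(items, k):
            s = frozenset(combo)
            if conflict_free(s) and all(defended(s, a) for a in s):
                result.append(s)
    return result


def preferred_extensions(args: Set[str], attacks: Attacks) -> List[FrozenSet[str]]:
    """Step 1: preferred extensions are admissible sets that are maximal w.r.t. inclusion."""
    adm = admissible_sets(args, attacks)
    return [s for s in adm if not any(s < t for t in adm)]


def rank_extensions(exts: List[FrozenSet[str]],
                    score: Callable[[FrozenSet[str]], int]) -> List[FrozenSet[str]]:
    """Step 2: order the extensions by a score function f: Subset -> N, best first."""
    return sorted(exts, key=score, reverse=True)


args = {"a", "b", "c"}
attacks = {("a", "b"), ("b", "a"), ("a", "c")}


def attacked_outside(s: FrozenSet[str]) -> int:
    # Hypothetical score: how many arguments outside s are attacked by s.
    return len({y for (x, y) in attacks if x in s and y not in s})


ranked = rank_extensions(preferred_extensions(args, attacks), attacked_outside)
print([(sorted(s), attacked_outside(s)) for s in ranked])
# [(['a'], 2), (['b', 'c'], 1)]
```

Enumeration is exponential in the number of arguments, so a real reasoner would rely on a dedicated solver; the score function is the only part that changes when a different metric is preferred.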

To do so, the work is divided into two parts:

- Crawler: first of all we need data, which is obtained by crawling social media conversations and building the graph.

- Reasoner: subsets are then generated by applying an Extension-based Semantics, and finally a score function is applied to each subset.
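The hand-off between the two parts can be sketched as writing the crawled graph to a file the reasoner can load. The sketch below assumes the ASPARTIX/ICCMA `apx` format (`arg(a).` and `att(a,b).` facts); whether Arguer reads this format is an assumption to check against its documentation, and `prolog_atom` and `to_apx` are hypothetical helpers.

```python
import re
from typing import Set, Tuple

# Names that are already valid unquoted Prolog atoms.
_PLAIN_ATOM = re.compile(r"[a-z][A-Za-z0-9_]*")


def prolog_atom(name: str) -> str:
    """Quote a name as a Prolog atom unless it is already a plain lowercase atom."""
    if _PLAIN_ATOM.fullmatch(name):
        return name
    escaped = name.replace("\\", "\\\\").replace("'", "\\'")
    return f"'{escaped}'"


def to_apx(args: Set[str], attacks: Set[Tuple[str, str]]) -> str:
    """Serialise an argumentation graph as arg/1 and att/2 facts, one per line."""
    lines = [f"arg({prolog_atom(a)})." for a in sorted(args)]
    lines += [f"att({prolog_atom(x)},{prolog_atom(y)})." for (x, y) in sorted(attacks)]
    return "\n".join(lines) + "\n"


print(to_apx({"alice", "Bob"}, {("Bob", "alice")}), end="")
# arg('Bob').
# arg(alice).
# att('Bob',alice).
```

Quoting matters because user names crawled from social media often start with an uppercase letter or contain symbols, which Prolog would otherwise read as variables or syntax errors.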

I suggest setting up a virtual environment using Miniconda:

- Clone this repo:

  `git clone https://github.com/chrisPiemonte/argonaut.git`

  `cd argonaut/`

- Put your credentials in `res/credentials.yml`.

- Create an environment with Python 3.6:

  `conda create --name=argonaut_env python=3.6`

- Switch to that environment:

  `conda activate argonaut_env`

- Install the requirements:

  `pip install -r requirements.txt`

Now you can either:

- Check the notebooks by running:

  `jupyter lab`

- Run the Argumentation Mining framework by running:

  `cd src`

  `./run.sh`

  You can edit the script to change the parameters (source, type of the output graph, type of argumentation framework, ...).

To use the reasoner:

- Install SWI-Prolog.

- Put Arguer in `src/reasoner/arguer`.

- Run the Prolog module:

  `swipl -s src/reasoner/argonaut`

- Inside the Prolog shell, run the main menu predicate:

  `argonaut.`

- Now you can:

  - Load an Argumentation Framework: enter `1.` and then `<path/to/file>.`

  - Generate and rank an extension of your choice: enter `2.` and then follow the menu.


Read the full documentation on Overleaf!