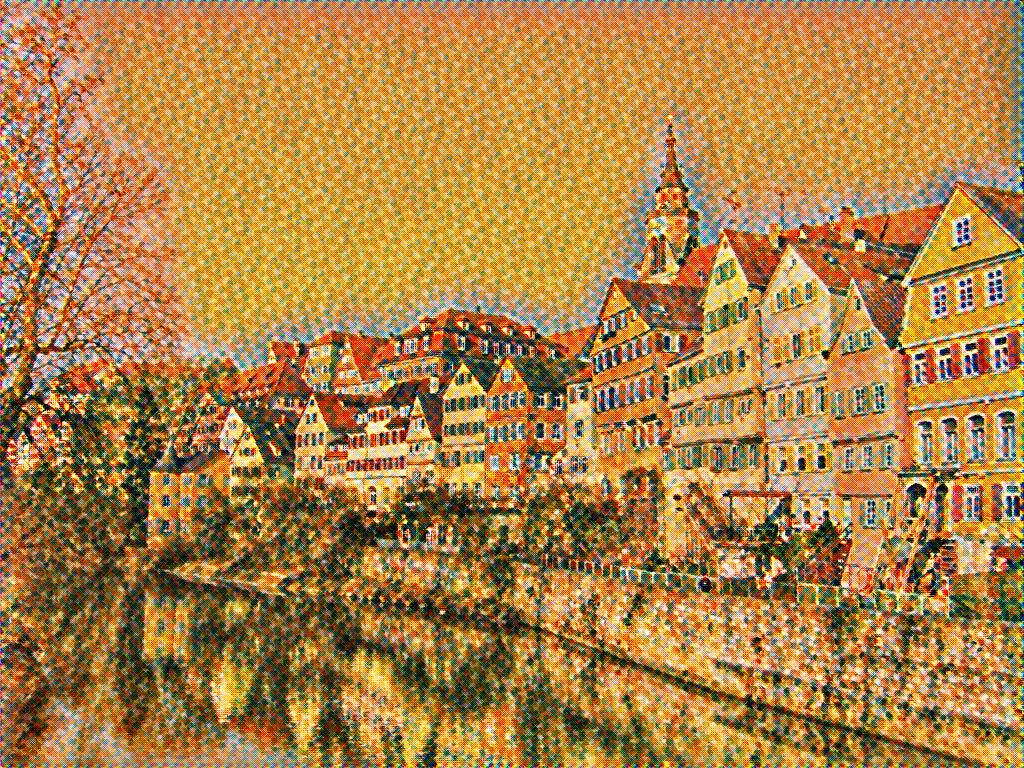

Fast artistic style transfer by using feed forward network.

- input image size: 1024x768

- process time(CPU): 17.78sec (Core i7-5930K)

- process time(GPU): 0.994sec (GPU TitanX)

- default

--image_sizeset to 512 (original uses 256). It's slow, but time is the price you have to pay for quality - ability to switch off dataset cropping with

--fullsizeoption. Crops by default to preserve aspect ratio - cropping implementation uses

ImageOps.fit, which always scales and crops, whereas original uses custom solution, which upscales the image if it's smaller than--image_size, otherwise just crops without scaling - bicubic and Lanczos resampling when scaling dataset and input style images respectively provides sharper shrinking, whereas original uses nearest neighbour

- Ability to specify multiple files for input to exclude model reloading every iteration. The format is standard Unix path expansion rules, like

file*orfile?.pngDon't forget to quote, otherwise the shell will expand it first. Saves about 0.5 sec on each image. - Output specifies path prefix if multiple files are used for input, otherwise an explicit filename

- Option

-xindicates content image scaling factor before transformation - Preserve original content colors with

--original_colorsflag. More info: Transfer style but not the colors

The repo includes a bash script to transform your videos. It depends on ffmpeg. Compilation instructions

./genvid.sh input_video output_video model start_time duration

The first three arguments are mandatory and should contain path to files.

The last two are optional and indicate starting position and duration in seconds.

I integrated Optical Flow implementation by @larspars to provide more consistent output for sequence of images by smoothing out the differences between frames. It requires opencv-python. Separate thanks to @genekogan for providing a thorough explanation of remarkably simple, yet efficient steps to put this together.

To use it, append the -flow option followed by amount of alpha blending like so

python generate.py 'frames/image*.png' -m models/any.model -o dir/prefix_ -flow 0.02

I find values between 0.02 and 0.05 to work best. It calculates motion vector between previous and current source frames, applies the distortion to previously transformed frame, overlays it on top of current source frame with -flow opacity and finally transforms it. This helps the network transformation to reveal the same features in current frame as were discovered in the previous. It only affects sequence of images so if there's a single image in the list, you won't see any difference.

$ pip install chainer

Download VGG16 model and convert it into smaller file so that we use only the convolutional layers which are 10% of the entire model.

sh setup_model.sh

Need to train one image transformation network model per one style target. According to the paper, the models are trained on the Microsoft COCO dataset.

python train.py -s <style_image_path> -d <training_dataset_path> -g 0

python generate.py <input_image_path> -m <model_path> -o <output_image_path>

This repo has pretrained models as an example.

- example:

python generate.py sample_images/tubingen.jpg -m models/composition.model -o sample_images/output.jpg

or

python generate.py sample_images/tubingen.jpg -m models/seurat.model -o sample_images/output.jpg

- Convolution kernel size 4 instead of 3.

- Training with batchsize(n>=2) causes unstable result.

This version is not compatible with the previous versions. You can't use models trained by the previous implementation. Sorry for the inconvenience!

MIT

Codes written in this repository based on following nice works, thanks to the author.

- chainer-gogh Chainer implementation of neural-style. I heavily referenced it.

- chainer-cifar10 Residual block implementation is referred.