
Audiomaze

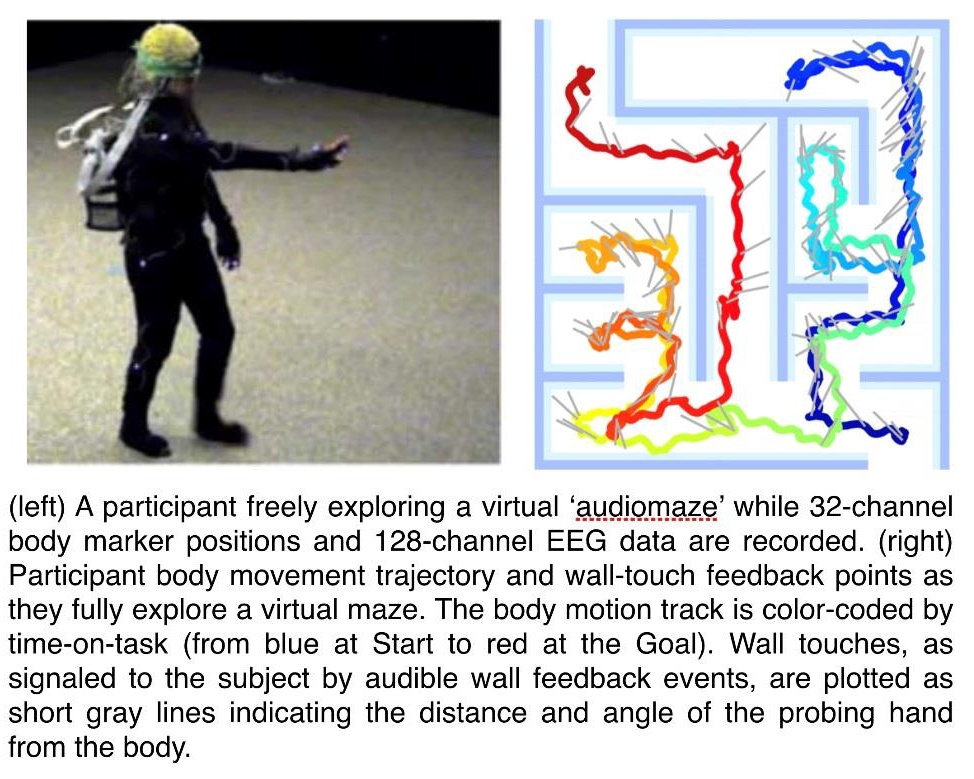

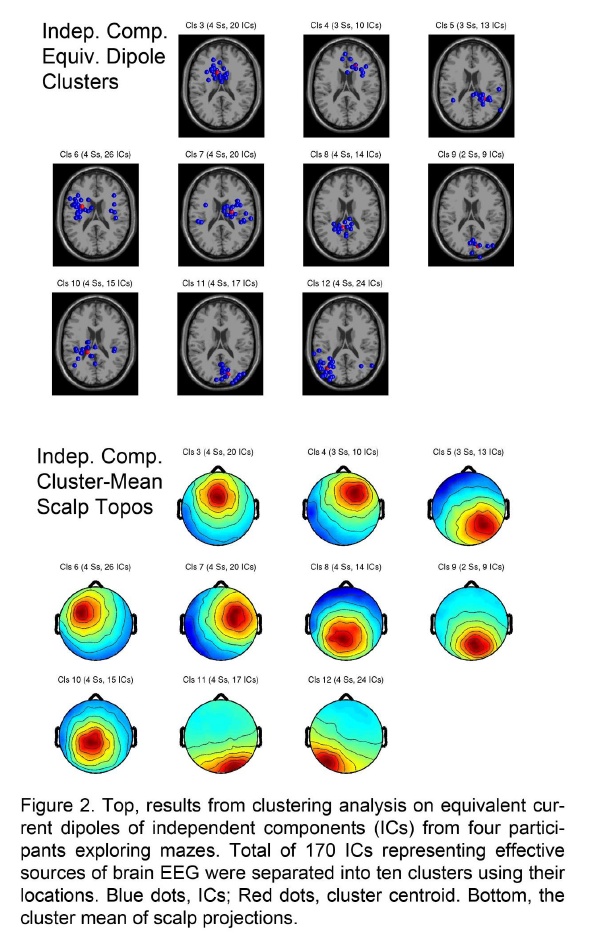

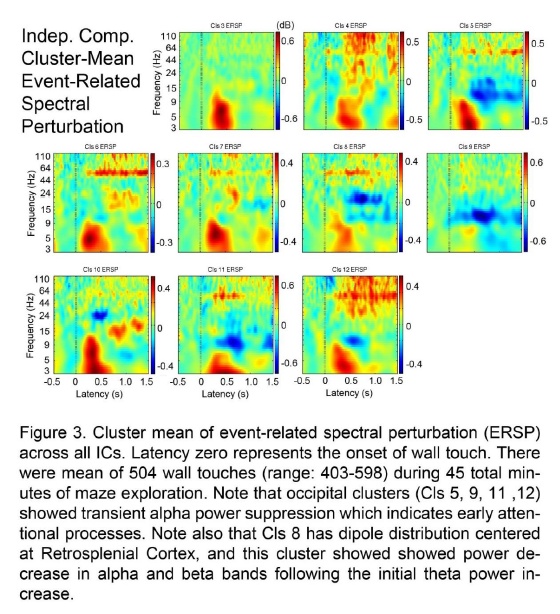

Audiomaze is an integrated mobile brain/body imaging (MoBI) paradigm for studying human navigation and the accompanying electrical brain activity during free, natural walking and exploration of a real space. The participant's task is to explore and learn a maze while blindfolded, using the right hand as the only guide: the hand can 'touch' walls, and each touch returns sound feedback. During the task, the participant's EEG is continuously recorded with a fully portable 128-channel active EEG system, and body movement is recorded with a 32-channel motion tracking system attached to the head, torso, right arm and hand, and both knees and feet. This paradigm implements our novel concept of "Sparse AR": controlling the flow of perceptual input, which is normally too fast, vast, and parallel to be studied in cognitive neuroscience, so that it becomes slow, quantized, and sequential, as if the incoming information were transformed into "perceptual atoms" that we can study with EEG.

Download the EEGLAB .set and .fdt files containing 128-channel EEG plus 32-channel motion tracker data from one session of one participant from here (332MB total).

Download the EEGLAB plugin motionTrackerCorrectionTool1.00 to process the above data. To install it, follow the standard EEGLAB plugin procedure: unzip it, place the folder under /(EEGLABroot)/eeglab/plugins, and restart EEGLAB. A 'Motion Tracker Correction Tool' item then appears under the 'Tools' menu. The algorithm and processing flow are summarized in the PDF file linked below. Note that this is an old demo version from 2018.

Download the PDF file describing the motion tracker data and correction methods from here (488KB).

Download movies demonstrating the effect of motion tracker correction: before correction (4.8MB) and after correction (3.8MB).

(Added 05/16/2020) I have also uploaded an updated version of the processing pipeline. Unlike the version above, it is provided as MATLAB functions rather than wrapped as an EEGLAB plugin. For non-rigid-body marker correction, it uses a neural network. Download the updated preprocessing pipeline.

This project is funded by Collaborative Research in Computational Neuroscience (CRCNS) NSF 1516107, US-German Research Proposal "Neural Dynamics of the Integration of Egocentric and Allocentric Cues in the Formation of Spatial Maps During Fully-Mobile Human Navigation" (PI: Scott Makeig). See the NSF website. A companion project is being funded by the German Federal Ministry of Education and Research (BMBF). The Swartz Center for Computational Neuroscience is supported by the Swartz Foundation.

The current default value of 'handlejitterremoval' in load_xdf() is 'true'. This default presumably assumes that the data are well behaved and that the jitter is negligible. However, after working on several MoBI projects, I have found this to be a dangerous assumption. I recommend checking the raw data BEFORE applying linear regression to the timestamps. For details, see this slide.
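To illustrate why checking the raw timestamps matters, here is a minimal Python/NumPy sketch. It is not the load_xdf() implementation; it only assumes that a stream's timestamps are available as a 1-D array and that its nominal sampling rate is known. The helper names (inspect_timestamps, linear_dejitter, segmented_dejitter) and the gap threshold of 2x the nominal sample period are hypothetical choices for illustration. On a simulated stream with a dropout, a single regression line over the whole recording shifts timestamps by about a second, while fitting each gap-free segment separately keeps the error at the jitter level.

```python
import numpy as np


def inspect_timestamps(ts, nominal_srate, gap_factor=2.0):
    """Summarize raw timestamps before any dejittering (hypothetical helper).

    A gap is any inter-sample interval longer than gap_factor times the
    nominal sample period; non-monotonic steps are intervals <= 0.
    """
    ts = np.asarray(ts, dtype=float)
    dt = np.diff(ts)
    nominal_dt = 1.0 / nominal_srate
    gap_idx = np.flatnonzero(dt > gap_factor * nominal_dt)
    return {
        "effective_srate": (ts.size - 1) / (ts[-1] - ts[0]),
        "dt_median": float(np.median(dt)),
        "dt_std": float(np.std(dt)),
        "n_gaps": int(gap_idx.size),
        "n_nonmonotonic": int(np.count_nonzero(dt <= 0)),
        "gap_indices": gap_idx,
    }


def linear_dejitter(ts):
    """Replace timestamps with a least-squares line fit against sample index."""
    ts = np.asarray(ts, dtype=float)
    idx = np.arange(ts.size)
    slope, intercept = np.polyfit(idx, ts, 1)
    return intercept + slope * idx


def segmented_dejitter(ts, gap_indices):
    """Fit a separate line within each gap-free segment (hypothetical helper)."""
    ts = np.asarray(ts, dtype=float)
    out = ts.copy()
    # Segment boundaries: a gap after sample i starts a new segment at i + 1.
    bounds = [0, *(int(i) + 1 for i in gap_indices), ts.size]
    for start, stop in zip(bounds[:-1], bounds[1:]):
        if stop - start >= 2:  # need at least two points to fit a line
            out[start:stop] = linear_dejitter(ts[start:stop])
    return out


# Simulated 100 Hz stream: 10 s of data, a ~2 s dropout, then 10 s more,
# with 1 ms Gaussian jitter added to every timestamp.
rng = np.random.default_rng(0)
srate = 100.0
true_ts = np.concatenate([np.arange(1000) / srate, 12.0 + np.arange(1000) / srate])
raw_ts = true_ts + rng.normal(0.0, 0.001, true_ts.size)

report = inspect_timestamps(raw_ts, srate)
err_global = np.max(np.abs(linear_dejitter(raw_ts) - true_ts))
err_segmented = np.max(np.abs(segmented_dejitter(raw_ts, report["gap_indices"]) - true_ts))

print("gaps:", report["n_gaps"], "non-monotonic steps:", report["n_nonmonotonic"])
print(f"max error, one global line: {err_global:.3f} s")
print(f"max error, per-segment lines: {err_segmented:.4f} s")
```

If the report shows gaps or non-monotonic steps, one regression over the whole stream is not appropriate, which is exactly the kind of problem that is easy to miss when jitter removal is applied by default.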