
Recursive models in keras #5160

Comments

|

It's not a cycle because you aren't running things infinitely. Also, what kind of merge? If you concat, the input dim will increase for each loop, so you can't use the same dense layer. Sum or another type of merge would be fine. The first time through the loop there is nothing to merge, so you could start by initializing to zeros or ones, depending on your type of merge. If you want to be fancy, you could try to use scan and other functions, but just defining the model in a loop is fine.

i = Input(...)
o = zero(...)
d = Dense(512)
for j in range(depth):
    m = merge([i, o], mode='sum')
    o = d(m)

Cheers |

|

You should almost never use while loops in theano or tensorflow, with few exceptions. Use scan, which is more like a for loop, and will run for a finite number of iterations. |

|

Hi @bstriner Thanks for the reply. |

|

@bstriner I think your example does not implement what I intended. What you have built is something like this, which is completely different from what I want. |

|

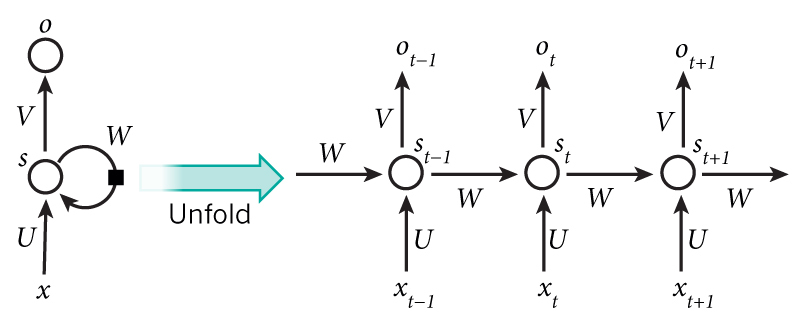

Based on the imprecision in the discussion so far, I'm not convinced this is a feature request as per the guidelines. Let's find out. @ParthaEth it sounds like you want to build a custom RNN. Your block diagram already looks somewhat like May I recommend that first you check if If it does not do what you want, perhaps you are looking at a novel type of recurrent network? The answer may still be simple: subclass it yourself. The Alternatively, you could make it precise here what exactly |

|

@danmackinlay Thanks for your interest. The first diagram is a toy version of what I want to do, so it is simple to achieve with a SimpleRNN layer, for example. What I am actually attempting is to introduce a recurrent connection that potentially goes multiple levels back. I think this is the only kind of model that can't be built in Keras. I can try to draft such functionality with either the subclassing idea or something else. Here is the actual model that I want to design. It might look very cluttered, but I have drawn it as simply as possible. |

|

Also @bstriner what did you mean by |

|

@ParthaEth In my example, it is reusing the dense layer. So your drawing is almost correct, except all of those dense layers are the same dense layer with shared weights. That's what recurrence is. For any recurrent network, try to draw the unrolled network, not the loop. This will always work unless you want infinite recursion, which you couldn't do anyways. I meant A Dense layer has a weight matrix with some number of input dimensions. You can't reuse that Dense layer on a different number of input dimensions. If you concatenate and each time through the loop the input dimension grows, you would have to have different dense layers. So if your merge is a sum, you could keep recurring on the same dense layer. If your merge is a concat, then you have to have several different dense layers, which is no longer recurrent. @danmackinlay I agree that an RNN, maybe with some modifications, is what he probably wants. I was explaining how to build an unrolled RNN manually, which would be easier than trying to explain how scan works. Cheers |
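The point about a Dense layer being tied to one input width can be illustrated with a toy layer in plain Python. This is only a sketch: `TinyDense`, `merge_sum`, and `merge_concat` are hypothetical stand-ins invented for the illustration, not Keras classes, and the constant kernel values are arbitrary.

```python
class TinyDense:
    """Toy stand-in for a dense layer: the kernel shape is fixed on first use."""

    def __init__(self, units):
        self.units = units
        self.kernel = None  # created lazily on the first call

    def __call__(self, x):
        if self.kernel is None:
            # One row per input feature, one column per output unit.
            self.kernel = [[0.1] * self.units for _ in x]
        if len(x) != len(self.kernel):
            raise ValueError(
                "layer was built for %d inputs, got %d" % (len(self.kernel), len(x))
            )
        return [
            sum(xi * row[j] for xi, row in zip(x, self.kernel))
            for j in range(self.units)
        ]


def merge_sum(a, b):
    # Element-wise sum keeps the width unchanged.
    return [ai + bi for ai, bi in zip(a, b)]


def merge_concat(a, b):
    # Concatenation adds the two widths together.
    return a + b


i = [1.0, 2.0, 3.0]
d = TinyDense(3)
o = d(merge_sum(i, [0.0, 0.0, 0.0]))  # first call builds a kernel for 3 inputs
o = d(merge_sum(i, o))                # same width, so the same layer is reused

try:
    d(merge_concat(i, o))             # width 6 no longer matches the kernel
    reused_on_concat = True
except ValueError:
    reused_on_concat = False
```

With the sum merge the layer sees width 3 on every pass, so the weights can be shared. As soon as a merge changes the width, the same layer object rejects the input and a second layer would be needed.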

|

I think I have found a way. For verification, and for those who come back to this later, here is some simple code that creates a loop in the model, i.e. feeds a later stage's output back to a previous stage.

from keras.layers import Dense, Input
from keras.models import Model

input = Input(batch_shape=(2, 5), dtype='float32', name='current_pose_input')
d1 = Dense(5)
o = d1(input)
d = Dense(5)(o)
m = Model(input=input, output=d)
from keras.utils.visualize_util import plot |

|

@ParthaEth the example you just posted? You're just reusing a layer, which is what I posted in my for loop. Since all of the layers have 5 output dims, there is no problem reusing layers. Your layer recurs 1 time, exactly as you coded it. You just coded an unrolled rnn! If you don't think about it as a loop, it is just this linear sequence.

If you wrote a for loop to go through that sequence, you would have multiple recurrences.

o = input
for i in range(depth):
    o = d1(o)
    o = d2(o)
    o = d3(o)
o = d(o) |

|

(The image looks like an infinite loop but remember your code is only looping once.) |

|

I think this is a wrong drawing though. Because this is what is different from an unfolded RNN here. In case of rnns as shown in @bstriner 's figure the neurons at time |

|

Notice the input into the second |

|

You almost have it. Just pass the input to dense1 if you want it to have that input. This is how you could write an RNN completely unrolled.

input = ...
o = zero(...)
for i in range(depth):
    h = merge([input, o]...)
    o = d(h)

The hidden state needs to be initialized to something like 0s. Pass the hidden state and the input to the dense layer. Do that in a loop. Problem solved. |
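To make the "loop equals unrolled network" point concrete, here is a minimal scalar sketch. It assumes a sum merge and a one-unit dense layer with a tanh activation; the names `dense` and `unrolled` and the numeric values are made up for the illustration and are not Keras calls.

```python
import math


def dense(h, w, b):
    # A one-unit dense layer with tanh activation.
    return math.tanh(w * h + b)


def unrolled(x, depth, w, b):
    o = 0.0  # hidden state starts at zero, the identity for a sum merge
    for _ in range(depth):
        h = x + o           # plays the role of merge([input, o], mode='sum')
        o = dense(h, w, b)  # the same weights are used at every step
    return o


x, w, b = 0.5, 0.8, 0.1
by_loop = unrolled(x, 3, w, b)

# The same three steps written out by hand as one acyclic chain.
by_hand = dense(x + dense(x + dense(x + 0.0, w, b), w, b), w, b)
```

Both expressions compute the same value, which is the sense in which the for loop only describes a finite, acyclic chain of shared-weight layers.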

|

@bstriner Perfect but that leaves two problems.

Consider the following drawing. A simple higher-level unroll doesn't give us an RNN in which all the layers spanned by the feedback act as one layer of the RNN. So we have to consider providing support for feedback connections. The unrolling is too difficult to do manually, as it requires all the elements in the intermediate layers to be unrolled too. |

|

Not sure if there is a way to automatically draw the unrolled network. Might be worthwhile PR. If you want something other than zeros, do something else in your loop.

As @danmackinlay mentioned, you could take a look at SimpleRNN and K.rnn to see how Keras does other backends. There are two ways it can make RNNs:

Both ways will give you the same network, with maybe slightly better performance using scan, but performance isn't your issue right now. If eventually you wanted to try implementing a custom RNN layer to do what you want to do, that is also a possibility. You end up having most of your network within a single layer. It might perform a little better but just writing out the unrolled network is going to be easier. Regarding the LSTM that is part of your model, you could build a one-layer lstm out of some dense layers. Unrolling some weird custom network takes some time, but if ML was easy, everyone would do it. |

|

Glad that I could convey my point. I hope you see exactly why it is necessary to have an automatic mechanism for unrolling, because otherwise everyone has to unroll their network by hand every time they want a feedback connection. I will try to understand and implement code that can unroll automatically. I think it is not difficult if there are no recurrent layers in the loop, i.e. the simple for loop already does the job. But as soon as there are LSTM-like layers within the feedback loop, things get a bit messy. It would be great if I am not the only one trying to tackle this daunting task. Let's form a team with ai-on.org. As for "but if ML was easy, everyone would do it": wasn't that the spirit of Keras? A library that makes deep learning so easy that everyone can do it. @fchollet maybe take a look at the above discussion. |
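The case without recurrent layers inside the feedback loop can be sketched as a small helper. This is only an illustration of the idea in plain Python: `unroll_feedback` and the toy layers are hypothetical names, not part of Keras, and stateful layers such as LSTM are deliberately out of scope.

```python
def unroll_feedback(layers, inputs, initial_feedback, merge):
    """Unroll a stack of stateless layers whose last output feeds the first.

    layers:           callables applied in order at every time step
    inputs:           one input value per time step
    initial_feedback: value fed back at the first step
    merge:            callable combining the current input with the feedback
    """
    feedback = initial_feedback
    outputs = []
    for x_t in inputs:
        h = merge(x_t, feedback)
        for layer in layers:  # the same layer objects are reused at every step
            h = layer(h)
        outputs.append(h)
        feedback = h          # jumps back over every layer in the stack
    return outputs


def double(v):
    return 2 * v


def add_one(v):
    return v + 1


def add(x, f):
    return x + f


outs = unroll_feedback([double, add_one], [1, 1, 1], 0, add)
```

Here the feedback skips back over two layers at once, and the helper still produces a plain acyclic sequence of calls.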

|

What do you mean by unrolling automatically? I'm trying to imagine what that would look like. If the model you're imagining has loops through time then it isn't a valid model. If you want to be able to build a completely arbitrary graph, sometimes that involves thinking of a for loop or using K.rnn. If you don't like the for loop idea, go straight for K.rnn. You need to write a step function that computes each step through time. Now, one thing to consider:

Cheers |
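The step-function style can be sketched in plain Python. The `rnn` helper below is a toy stand-in written for this illustration, not the Keras backend function; it only mirrors the general shape of a step function that takes the current input and a list of states and returns an output plus the new states.

```python
def rnn(step_function, inputs, initial_states):
    """Toy driver: call step_function once per time step, threading the states."""
    states = list(initial_states)
    outputs = []
    for x_t in inputs:
        output, states = step_function(x_t, states)
        outputs.append(output)
    last_output = outputs[-1] if outputs else None
    return last_output, outputs, states


def running_sum_step(x_t, states):
    # One step through time: combine the input with the previous state.
    total = states[0] + x_t
    return total, [total]


last, outs, final_states = rnn(running_sum_step, [1, 2, 3, 4], [0])
```

Everything that happens between two time steps lives inside the step function, so a feedback that spans several layers would have to be written inside that one function.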

|

@bstriner I don't think I understand why we can't train a network which has a time loop. Every RNN structure has time loops. Look at the upper (rightmost) diagram in this again.

input = Input(batch_shape=(2, 5), dtype='float32', name='current_pose_input')
f1 = Feedback(initial_tensor=Zeros(dim))
o = LSTM(10, f1)
o = TimeDistributed(Dense()(o))
f1(o)
m = Model(input=input, output=d)
m.compile('rmsprop', 'mse')

Now all we have to do in the

|

|

RNNs have loops through time where t+1 depends on t. If you're imagining something where t+1 depends on t and t depends on t+1, then you've got something else. Being able to unroll something is key. If you can't draw your network as an acyclic graph, then it is not a valid network. You're welcome to try to make something like the Feedback layer, but I don't see how it would work. It would have to have calculated everything before calculating anything. If you can't get the feedback layer to work, either:

|

|

I think @bstriner is right that we don't want to conflate the directed acyclic graph model which we use in RNN training with a hypothetical machinery to train arbitrary graphs. Nonetheless, pedantry compels me to mention that there are valid networks that possess cycles, although not networks in the sense we usually assume in Keras, e.g. Boltzmann machines are represented by undirected graphs. Look up "loopy belief propagation" for one inference algorithm for those, which is not unrelated to the backprop that we use on typical directed acyclic graphs in that it is a message-passing algorithm. That formalism is not quite the same as the Keras RNN, which can be unrolled into an acyclic graph. Note also that Keras inherits from Theano/TensorFlow a restriction to directed acyclic graphs: graphs with cycles will not compile. @ParthaEth could probably train graphs with cycles using Keras, by some cunning trick or other, but without claiming expertise in this area, I suspect (?) the method might look rather different from the current RNN implementation, so we shouldn't confuse these problems with one another. Check out the Koller and Friedman book for some algorithms there, and let us know if you implement anything spectacular. |
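The difference between the looped drawing and its unrolled form can be checked with a topological sort. The sketch below uses Kahn's algorithm on edge lists; the node names are made up for the illustration, and in the unrolled graph `dense_1` and `dense_2` stand for two applications of the same shared layer.

```python
from collections import deque


def topological_order(edges):
    """Return the nodes in a valid execution order, or None if there is a cycle."""
    children = {}
    indegree = {}
    for src, dst in edges:
        children.setdefault(src, []).append(dst)
        children.setdefault(dst, [])
        indegree[dst] = indegree.get(dst, 0) + 1
        indegree.setdefault(src, 0)

    # Start from the nodes that depend on nothing.
    ready = deque(sorted(n for n, deg in indegree.items() if deg == 0))
    order = []
    while ready:
        node = ready.popleft()
        order.append(node)
        for child in children[node]:
            indegree[child] -= 1
            if indegree[child] == 0:
                ready.append(child)

    # Nodes stuck on a cycle never reach indegree zero.
    return order if len(order) == len(indegree) else None


looped = [("input", "merge"), ("merge", "dense"), ("dense", "merge")]
unrolled = [
    ("input", "merge_1"), ("merge_1", "dense_1"),
    ("input", "merge_2"), ("dense_1", "merge_2"),
    ("merge_2", "dense_2"),
]

looped_order = topological_order(looped)
unrolled_order = topological_order(unrolled)
```

The looped drawing has no valid execution order, while the two-step unrolled version does, which matches the requirement that the graph handed to the backend be acyclic.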

|

@bstriner and @danmackinlay I think both of you got my point wrong. So here is a second try. I am not trying to make a cyclic graph in the sense of loopy belief propagation. I am just trying to make an RNN where the feedback jumps several layers back. Computation of time Now what I am arguing for is to provide a mechanism where a user of Keras doesn't need to write the time-unrolled version themselves. Much like for a simple RNN, you won't ask someone to use a Dense layer and build their own time-unrolled version but have a layer called |

|

@ParthaEth I get what you want, but you want Keras to do magic so you don't have to write a for loop. The model you want is acyclic, but you want Keras to support writing models in a cyclical style and magically unrolling them. In the example, you apply a TimeDistributed(Dense) before the input has been calculated. If you have any ideas about how you would implement this, then that is a useful discussion. I just don't think it can be done efficiently without tossing most of Keras. You would need every layer to support magical tensor placeholders for values that haven't been calculated yet. If you think unrolling is ugly, then post some more of your code and we'll figure out how to clean it up. You are also completely ignoring performance. Using customized calls to K.rnn is going to perform the best. That is why custom RNN layers exist. There is often a tradeoff between things that are easy to use and things that are efficient. So:

The reason I keep telling you to try the for loop, is because that is something you can do yourself quickly. We're having this entire discussion before we even know if your model works. If you make a model that works and improves on the state of the art, then you can get people to contribute to making it easier to write. If your model has the same performance as just a single LSTM, then we didn't waste a bunch of time trying to modify keras just to support that model. If you write something in Keras that does something useful, then we can talk about how to make it easier to write. So, if you get it working with the for loop, show that it does something useful but takes a lot of code to write, then people would be much more willing to help make it more efficient. Best of luck, |

|

@bstriner Perfect, great that you understand exactly my intention. Now, this is not some new, imaginative network that I am trying to implement while expecting a wild performance improvement. It is just the model used by the paper "A Recurrent Latent Variable Model for Sequential Data". It looks like the model I drew on the yellow paper earlier. Sorry that I did not mention it earlier. There is an implementation of this network in Torch and it performs quite well. But in our lab we are actually moving to TensorFlow, and since Keras is so nice I gave it a try, but now I am stuck with this. I hope this answers the question of why we might want to make RNN implementations easy. Finally, it is not only about making this particular architecture easy; in general it would make new RNNs easy. Do have a look at this, I believe @farizrahman4u is already doing such stuff. What about porting them to Keras? Also have a look at this discussion |

|

@ParthaEth and @farizrahman4u here is the real fundamental issue with Keras that we've been skirting around. Layers like

def call(self, x):
    return self.call_on_params(x, *self.params)

def call_on_params(self, x, W, b):
    ...

If we had something like that, we could use ordinary layers within RNNs. It would also let us do really advanced stuff like unrolled GANs and backtracking. However, that is a relatively large change we would have to be very careful with. If we want to build an entirely new module of Keras for making RNN building blocks, that might be reasonable. For something entirely new like that, start a discussion over on contrib. https://github.com/farizrahman4u/keras-contrib @ParthaEth Why don't you just try recurrentshop? If it works well, then you have your solution. If it doesn't work, then you'll see what's missing and we can make something better. |

|

@bstriner I guess RecurrentShop will be able to do what I want for the time being. I'll give it a try. I am not sure if it already makes GANs feasible, though. I do not think I understand your first part about the One more thought: I read that with the TensorFlow backend every RNN is unrolled anyway. If so, then we need not really worry about performance and can just unroll any kind of RNN graph, can't we? |

|

I guess this is the place where we could unroll a time feedback. That way everything would still be directed acyclic. As for the performance issue: since RNNs are unrolled anyway with the TF backend, this should not be a bad deal. |

|

That TF documentation was really outdated. I changed it a few days ago. #5192 If you're working on GANs, there are a lot of things to keep in mind. I made a module to make things easier. You should be able to use this in combination with recurrentshop. https://github.com/bstriner/keras-adversarial It's not a huge difference between unrolled and a symbolic loop. If you make something with high accuracy that is a little slow, someone will help make it faster. So you could either unroll your models in a for loop, which gives you access to all Keras models and layers, or build a custom layer in recurrentshop. Recurrentshop will perform better, but I have no idea if the difference is significant. The

W = glorot_uniform((n, m))
b = zeros((m,))

# fun1 reads W and b from the enclosing scope
def fun1(_x):
    return K.dot(_x, W) + b

y1, _ = theano.scan(fun1, sequences=x)

# fun2 receives W and b explicitly as non-sequences
def fun2(_x, _W, _b):
    return K.dot(_x, _W) + _b

y2, _ = theano.scan(fun2, sequences=x, non_sequences=[W, b])

Because Keras layers directly access parameters in call, there is no easy way to reuse them within a scan. @farizrahman4u gets around these issues by building a framework for making an arbitrarily complex recurrent network within a single layer.
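The difference between the two functions can be seen without Theano. The `scan` below is a toy stand-in written for this illustration (it is not `theano.scan`), and the scalar weights are arbitrary; it only shows why a function that takes its parameters explicitly is easier to reuse inside a loop construct than one that captures them.

```python
def scan(fn, sequences, non_sequences=()):
    """Toy scan: apply fn to each element, passing the extra arguments along."""
    return [fn(x_t, *non_sequences) for x_t in sequences]


W, b = 2.0, 1.0


def fun1(x_t):
    # Parameters are captured from the enclosing scope, as a layer's call does.
    return x_t * W + b


def fun2(x_t, w, bias):
    # Parameters arrive explicitly, so the caller decides which ones to use.
    return x_t * w + bias


xs = [1.0, 2.0, 3.0]
y1 = scan(fun1, xs)
y2 = scan(fun2, xs, non_sequences=(W, b))
y3 = scan(fun2, xs, non_sequences=(10.0, 0.0))  # same code, different weights
```

`fun1` and `fun2` agree when given the same weights, but only `fun2` lets the loop construct see and supply the parameters, which is the property the `call_on_params` idea is after.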

|

|

@bstriner So how about this. When |

|

Is there a major flaw in the above idea? This seems simple, but somehow you guys don't seem so excited about it. Am I overlooking something big and troubling? |

|

It sounds like what we need is a sort of "scan" or "K.rnn" wrapper for layers. |

|

@lemuriandezapada Not really. I don't think we need to have that sophisticated functionality. Just like stateful we can make the full batch size mandatory and roll out the model in model constructor. |

|

Keras (and TensorFlow and Theano) aren't really set up for dynamic computation graphs. Check out something like DyNet or PyTorch instead, which don't have as big a gap between graph compilation and graph running. |

|

@ParthaEth does my comment in the seq2seq issue address your goal? As an aside: I'm currently also experimenting with recurrent autoencoders :) |

|

This issue has been automatically marked as stale because it has not had recent activity. It will be closed after 30 days if no further activity occurs, but feel free to re-open a closed issue if needed. |

|

@chmp I have not followed this thread for a long time now, so I am not sure if your suggestion solves this issue. Perhaps Manuel Kaufmann has some ideas. |

|

Hey, but the error message tells me

If I had to guess, I'd think the reason for this is that x got a shape of (None, 1) and y's shape is (1,0).. I am still a little bit confused how to use the K.zeros(..)-command as an empty Tensor. PS: Using SimpleRNN sadly is not an option, since later on I want to replace the Dense layers with an already finished whole model :( |

|

Hi guys |

o = K.zeros(512)
d = Dense(512)
for j in range(5):
    m = Add()([i, o])
    o = d(m)
model = Model(inputs=i, outputs=o)
from keras.utils import plot_model
plot_model(m, to_file='model.png', show_shapes=True)

This is showing inbound nodes error. |

|

@ParthaEth and @bstriner I have created a very simple piece of code and a picture; refer to this link. If you have a solution to this code using any Keras RNN, it would be greatly appreciated. |

Check that you are up-to-date with the master branch of Keras. You can update with:

pip install git+git://github.com/fchollet/keras.git --upgrade --no-deps

If running on TensorFlow, check that you are up-to-date with the latest version. The installation instructions can be found here.

Hi,

I was wondering if a model of the following kind is possible in Keras. I am trying to implement a recurrent version of a conditional variational autoencoder and would like to try some different models, and in one case this cyclic nature arises. I simplified the whole model, but this is the problem which I can't solve, i.e. can we have a cyclic graph in Keras? It looks like a cycle is allowed in TensorFlow and Theano by methods such as tf.while_loop. What are your thoughts?
