diff --git a/annolid/gui/app.py b/annolid/gui/app.py

index daa1bb14..dd62798d 100644

--- a/annolid/gui/app.py

+++ b/annolid/gui/app.py

@@ -315,6 +315,14 @@ def __init__(self,

self.tr("Open video")

)

+ segment_cells = action(

+ self.tr("&Segment Cells"),

+ self._segment_cells,

+ None,

+ "Segment Cells",

+ self.tr("Segment Cells")

+ )

+

advance_params = action(

self.tr("&Advanced Parameters"),

self.set_advanced_params,

@@ -328,6 +336,12 @@ def __init__(self,

)

))

+ segment_cells.setIcon(QtGui.QIcon(

+ str(

+ self.here / "icons/cell_seg.png"

+ )

+ ))

+

open_audio = action(

self.tr("&Open Audio"),

self.openAudio,

@@ -488,6 +502,7 @@ def __init__(self,

_action_tools.append(quality_control)

_action_tools.append(colab)

_action_tools.append(visualization)

+ _action_tools.append(segment_cells)

self.actions.tool = tuple(_action_tools)

self.tools.clear()

@@ -502,6 +517,7 @@ def __init__(self,

utils.addActions(self.menus.file, (models,))

utils.addActions(self.menus.file, (tracks,))

utils.addActions(self.menus.file, (quality_control,))

+ utils.addActions(self.menus.file, (segment_cells,))

utils.addActions(self.menus.file, (downsample_video,))

utils.addActions(self.menus.file, (convert_sleap,))

utils.addActions(self.menus.file, (advance_params,))

@@ -991,6 +1007,46 @@ def _select_sam_model_name(self):

return model_name

+ def _segment_cells(self):

+ if self.filename or len(self.imageList) > 0:

+ from annolid.segmentation.MEDIAR.predict_ensemble import MEDIARPredictor

+ if self.annotation_dir is not None:

+ out_dir_path = self.annotation_dir + '_masks'

+ elif self.filename:

+ out_dir_path = str(Path(self.filename).with_suffix(''))

+ if not os.path.exists(out_dir_path):

+ os.makedirs(out_dir_path, exist_ok=True)

+

+ if self.filename is not None and self.annotation_dir is None:

+ self.annotation_dir = out_dir_path

+ target_link = os.path.join(

+ out_dir_path, os.path.basename(self.filename))

+ if not os.path.islink(target_link):

+ os.symlink(self.filename, target_link)

+ else:

+ logger.info(f"The symlink {target_link} alreay exists.")

+ mediar_predictor = MEDIARPredictor(input_path=self.annotation_dir,

+ output_path=out_dir_path)

+ self.worker = FlexibleWorker(

+ mediar_predictor.conduct_prediction)

+ self.thread = QtCore.QThread()

+ self.worker.moveToThread(self.thread)

+ self.worker.start.connect(self.worker.run)

+ self.worker.finished.connect(self.thread.quit)

+ self.worker.finished.connect(self.worker.deleteLater)

+ self.thread.finished.connect(self.thread.deleteLater)

+ self.worker.finished.connect(lambda:

+ QtWidgets.QMessageBox.about(self,

+ "Cell counting results are ready",

+ f"Please review your results."))

+ self.worker.return_value.connect(

+ lambda shape_list: self.loadShapes(shape_list))

+

+ self.thread.start()

+ self.worker.start.emit()

+ # shape_list = mediar_predictor.conduct_prediction()

+ # self.loadShapes(shape_list)

+

def stop_prediction(self):

# Emit the stop signal to signal the prediction thread to stop

self.pred_worker.stop()

@@ -1009,7 +1065,7 @@ def predict_from_next_frame(self,

if self.pred_worker and self.stop_prediction_flag:

# If prediction is running, stop the prediction

self.stop_prediction()

- elif len(self.canvas.shapes) <= 0:

+ elif len(self.canvas.shapes) <= 0 and self.video_file is not None:

QtWidgets.QMessageBox.about(self,

"No Shapes or Labeled Frames",

f"Please label this frame")

diff --git a/annolid/gui/icons/cell_seg.png b/annolid/gui/icons/cell_seg.png

new file mode 100644

index 00000000..5e116149

Binary files /dev/null and b/annolid/gui/icons/cell_seg.png differ

diff --git a/annolid/segmentation/MEDIAR/.gitignore b/annolid/segmentation/MEDIAR/.gitignore

new file mode 100644

index 00000000..c76d2755

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/.gitignore

@@ -0,0 +1,18 @@

+config/

+*.log

+*.ipynb

+*.ipynb_checkpoints/

+__pycache__/

+results/

+weights/

+wandb/

+data/

+submissions/

+/.vscode

+*.npy

+*.pth

+*.sh

+*.json

+*.out

+*.zip

+*.tiff

diff --git a/annolid/segmentation/MEDIAR/LICENSE b/annolid/segmentation/MEDIAR/LICENSE

new file mode 100644

index 00000000..2161ee79

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/LICENSE

@@ -0,0 +1,21 @@

+MIT License

+

+Copyright (c) 2022 opcrisis

+

+Permission is hereby granted, free of charge, to any person obtaining a copy

+of this software and associated documentation files (the "Software"), to deal

+in the Software without restriction, including without limitation the rights

+to use, copy, modify, merge, publish, distribute, sublicense, and/or sell

+copies of the Software, and to permit persons to whom the Software is

+furnished to do so, subject to the following conditions:

+

+The above copyright notice and this permission notice shall be included in all

+copies or substantial portions of the Software.

+

+THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR

+IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,

+FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE

+AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER

+LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,

+OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE

+SOFTWARE.

diff --git a/annolid/segmentation/MEDIAR/README.md b/annolid/segmentation/MEDIAR/README.md

new file mode 100644

index 00000000..af287217

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/README.md

@@ -0,0 +1,189 @@

+

+# **MEDIAR: Harmony of Data-Centric and Model-Centric for Multi-Modality Microscopy**

+

+

+

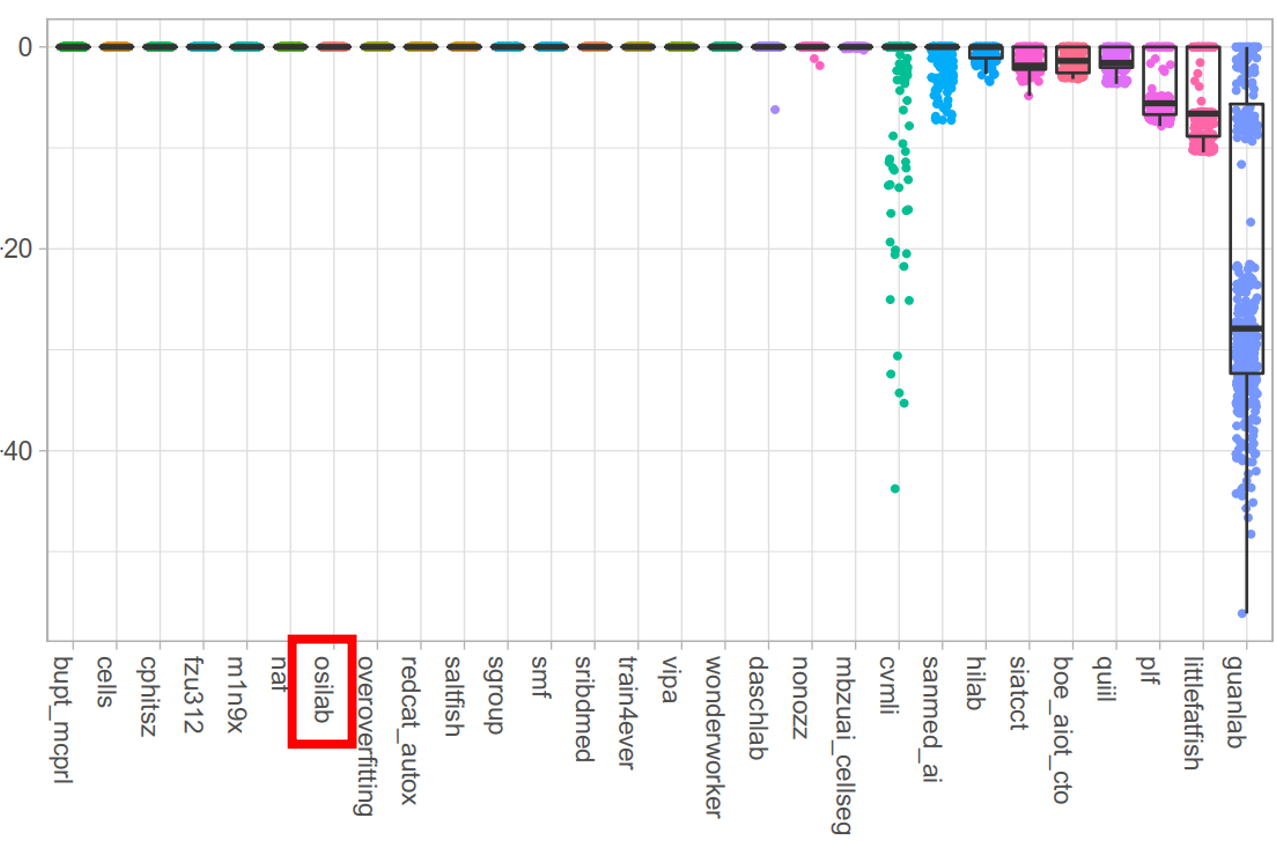

+This repository provides an official implementation of [MEDIAR: MEDIAR: Harmony of Data-Centric and Model-Centric for Multi-Modality Microscopy](https://arxiv.org/abs/2212.03465), which achieved the ***"1st winner"*** in the [NeurIPS-2022 Cell Segmentation Challenge](https://neurips22-cellseg.grand-challenge.org/).

+

+To access and try mediar directly, please see links below.

+-  +- [Huggingface Space](https://huggingface.co/spaces/ghlee94/MEDIAR?logs=build)

+- [Napari Plugin](https://github.com/joonkeekim/mediar-napari)

+- [Docker Image](https://hub.docker.com/repository/docker/joonkeekim/mediar/general)

+

+# 1. MEDIAR Overview

+

+- [Huggingface Space](https://huggingface.co/spaces/ghlee94/MEDIAR?logs=build)

+- [Napari Plugin](https://github.com/joonkeekim/mediar-napari)

+- [Docker Image](https://hub.docker.com/repository/docker/joonkeekim/mediar/general)

+

+# 1. MEDIAR Overview

+ +

+MEIDAR is a framework for efficient cell instance segmentation of multi-modality microscopy images. The above figure illustrates an overview of our approach. MEDIAR harmonizes data-centric and model-centric approaches as the learning and inference strategies, achieving a **0.9067** Mean F1-score on the validation datasets. We provide a brief description of methods that combined in the MEDIAR. Please refer to our paper for more information.

+# 2. Methods

+

+## **Data-Centric**

+- **Cell Aware Augmentation** : We apply two novel cell-aware augmentations. *cell-wisely intensity is randomization* (Cell Intensity Diversification) and *cell-wise boundary pixels exclusion* in the label. The boundary exclusion is adopted only in the pre-training phase.

+

+- **Two-phase Pretraining and Fine-tuning** : To extract knowledge from large public datasets, we first pretrained our model on public sets, then fine-tune.

+ - Pretraining : We use 7,2412 labeled images from four public datasets for pretraining: OmniPose, CellPose, LiveCell and DataScienceBowl-2018. MEDIAR takes two different phases for the pretraining. the MEDIAR-Former model with encoder parameters initialized from ImageNet-1k pretraining.

+

+ - Fine-tuning : We use two different model for ensemble. First model is fine-tuned 200 epochs using target datasets. Second model is fine-tuned 25 epochs using both target and public datsets.

+

+- **Modality Discovery & Amplified Sampling** : To balance towards the latent modalities in the datasets, we conduct K-means clustering and discover 40 modalities. In the training phase, we over-sample the minor cluster samples.

+

+- **Cell Memory Replay** : We concatenate the data from the public dataset with a small portion to the batch and train with boundary-excluded labels.

+

+## **Model-Centric**

+- **MEDIAR-Former Architecture** : MEDIAR-Former follows the design paradigm of U-Net, but use SegFormer and MA-Net for the encoder and decoder. The two heads of MEDIAR-Former predicts cell probability and gradieng flow.

+

+

+

+MEIDAR is a framework for efficient cell instance segmentation of multi-modality microscopy images. The above figure illustrates an overview of our approach. MEDIAR harmonizes data-centric and model-centric approaches as the learning and inference strategies, achieving a **0.9067** Mean F1-score on the validation datasets. We provide a brief description of methods that combined in the MEDIAR. Please refer to our paper for more information.

+# 2. Methods

+

+## **Data-Centric**

+- **Cell Aware Augmentation** : We apply two novel cell-aware augmentations. *cell-wisely intensity is randomization* (Cell Intensity Diversification) and *cell-wise boundary pixels exclusion* in the label. The boundary exclusion is adopted only in the pre-training phase.

+

+- **Two-phase Pretraining and Fine-tuning** : To extract knowledge from large public datasets, we first pretrained our model on public sets, then fine-tune.

+ - Pretraining : We use 7,2412 labeled images from four public datasets for pretraining: OmniPose, CellPose, LiveCell and DataScienceBowl-2018. MEDIAR takes two different phases for the pretraining. the MEDIAR-Former model with encoder parameters initialized from ImageNet-1k pretraining.

+

+ - Fine-tuning : We use two different model for ensemble. First model is fine-tuned 200 epochs using target datasets. Second model is fine-tuned 25 epochs using both target and public datsets.

+

+- **Modality Discovery & Amplified Sampling** : To balance towards the latent modalities in the datasets, we conduct K-means clustering and discover 40 modalities. In the training phase, we over-sample the minor cluster samples.

+

+- **Cell Memory Replay** : We concatenate the data from the public dataset with a small portion to the batch and train with boundary-excluded labels.

+

+## **Model-Centric**

+- **MEDIAR-Former Architecture** : MEDIAR-Former follows the design paradigm of U-Net, but use SegFormer and MA-Net for the encoder and decoder. The two heads of MEDIAR-Former predicts cell probability and gradieng flow.

+

+ +

+- **Gradient Flow Tracking** : We utilize gradient flow tracking proposed by [CellPose](https://github.com/MouseLand/cellpose).

+

+- **Ensemble with Stochastic TTA**: During the inference, the MEIDAR conduct prediction as sliding-window manner with importance map generated by the gaussian filter. We use two fine-tuned models from phase1 and phase2 pretraining, and ensemble their outputs by summation. For each outputs, test-time augmentation is used.

+# 3. Experiments

+

+### **Dataset**

+- Official Dataset

+ - We are provided the target dataset from [Weakly Supervised Cell Segmentation in Multi-modality High-Resolution Microscopy Images](https://neurips22-cellseg.grand-challenge.org/). It consists of 1,000 labeled images, 1,712 unlabeled images and 13 unlabeled whole slide image from various microscopy types, tissue types, and staining types. Validation set is given with 101 images including 1 whole slide image.

+

+- Public Dataset

+ - [OmniPose](http://www.cellpose.org/dataset_omnipose) : contains mixtures of 14 bacterial species. We only use 611 bacterial cell microscopy images and discard 118 worm images.

+ - [CellPose](https://www.cellpose.org/dataset) : includes Cytoplasm, cellular microscopy, fluorescent cells images. We used 551 images by discarding 58 non-microscopy images. We convert all images as gray-scale.

+ - [LiveCell](https://github.com/sartorius-research/LIVECell) : is a large-scale dataset with 5,239 images containing 1,686,352 individual cells annotated by trained crowdsources from 8 distinct cell types.

+ - [DataScienceBowl 2018](https://www.kaggle.com/competitions/sartorius-cell-instance-segmentation/overview) : 841 images contain 37,333 cells from 22 cell types, 15 image resolutions, and five visually similar groups.

+

+### **Testing steps**

+- **Ensemble Prediction with TTA** : MEDIAR uses sliding-window inference with the overlap size between the adjacent patches as 0.6 and gaussian importance map. To predict the different views on the image, MEDIAR uses Test-Time Augmentation (TTA) for the model prediction and ensemble two models described in **Two-phase Pretraining and Fine-tuning**.

+

+- **Inference time** : MEDIAR conducts most images in less than 1sec and it depends on the image size and the number of cells, even with ensemble prediction with TTA. Detailed evaluation-time results are in the paper.

+

+### **Preprocessing & Augmentations**

+| Strategy | Type | Probability |

+|----------|:-------------|------|

+| `Clip` | Pre-processing | . |

+| `Normalization` | Pre-processing | . |

+| `Scale Intensity` | Pre-processing | . |

+| `Zoom` | Spatial Augmentation | 0.5 |

+| `Spatial Crop` | Spatial Augmentation | 1.0 |

+| `Axis Flip` | Spatial Augmentation | 0.5 |

+| `Rotation` | Spatial Augmentation | 0.5 |

+| `Cell-Aware Intensity` | Intensity Augmentation | 0.25 |

+| `Gaussian Noise` | Intensity Augmentation | 0.25 |

+| `Contrast Adjustment` | Intensity Augmentation | 0.25 |

+| `Gaussian Smoothing` | Intensity Augmentation | 0.25 |

+| `Histogram Shift` | Intensity Augmentation | 0.25 |

+| `Gaussian Sharpening` | Intensity Augmentation | 0.25 |

+| `Boundary Exclusion` | Others | . |

+

+

+| Learning Setups | Pretraining | Fine-tuning |

+|----------------------------------------------------------------------|---------------------------------------------------------|---------------------------------------------------------|

+| Initialization (Encoder) | Imagenet-1k pretrained | from Pretraining |

+| Initialization (Decoder, Head) | He normal initialization | from Pretraining|

+| Batch size | 9 | 9 |

+| Total epochs | 80 (60) | 200 (25) |

+| Optimizer | AdamW | AdamW |

+| Initial learning rate (lr) | 5e-5 | 2e-5 |

+| Lr decay schedule | Cosine scheduler (100 interval) | Cosine scheduler (100 interval) |

+| Loss function | MSE, BCE | MSE, BCE |

+

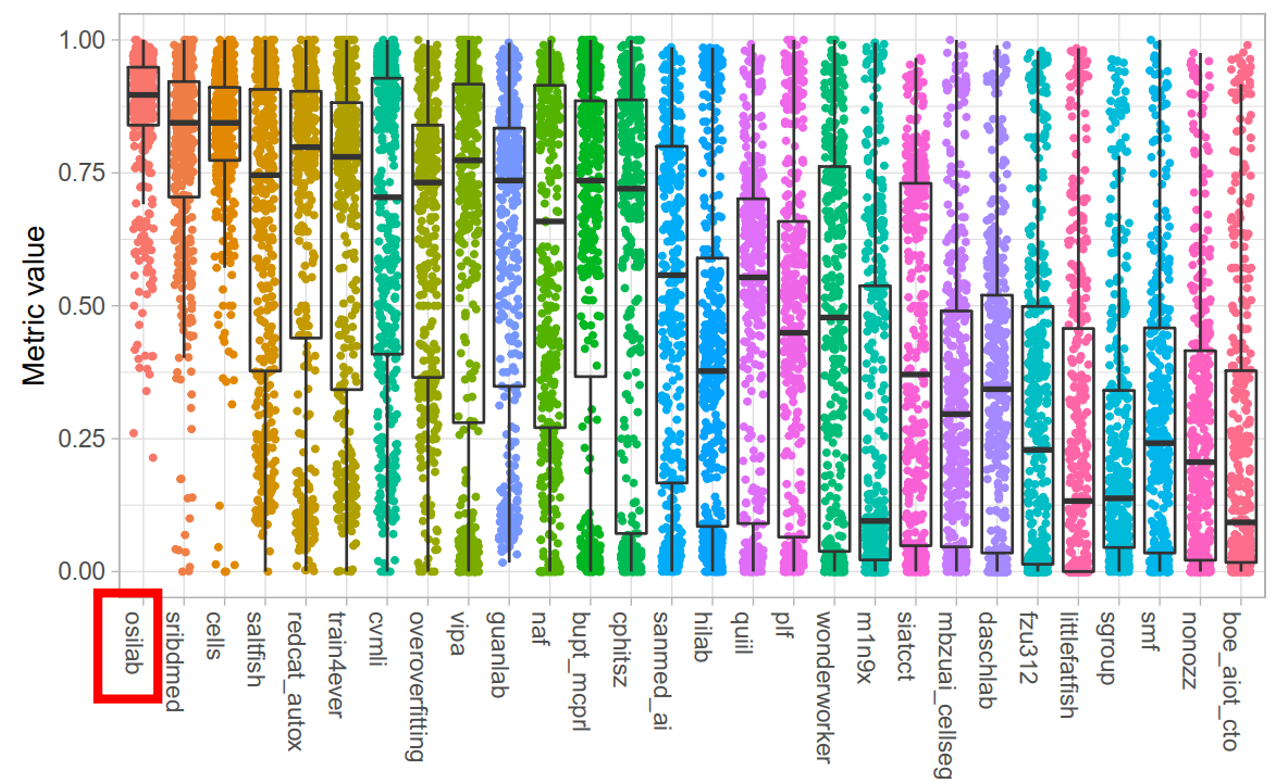

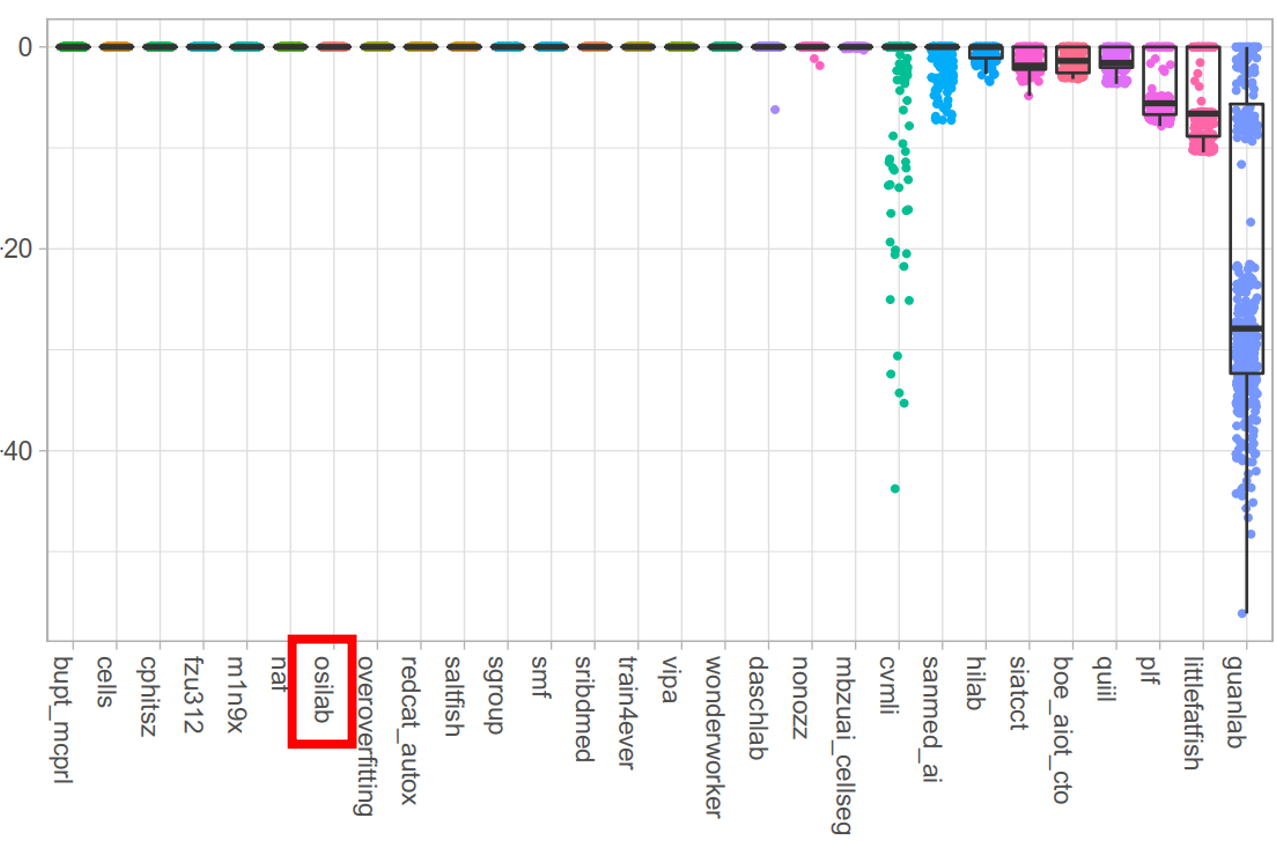

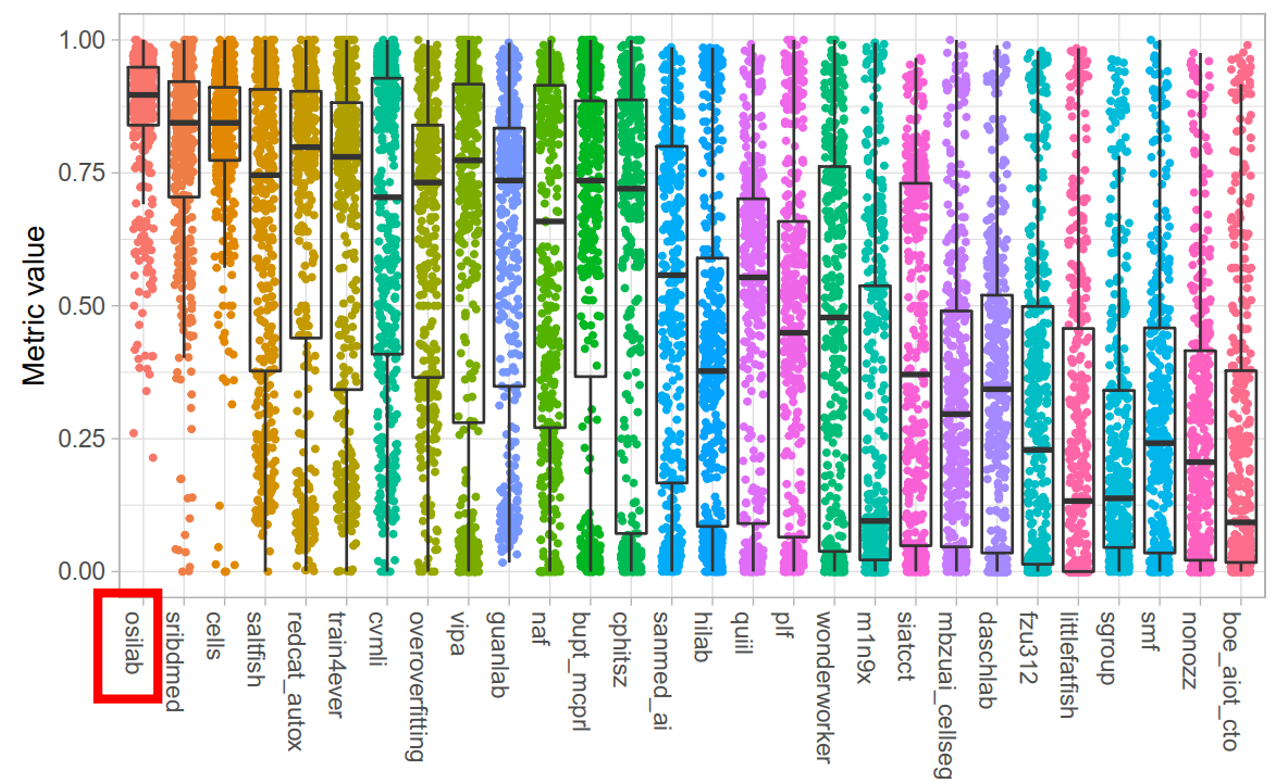

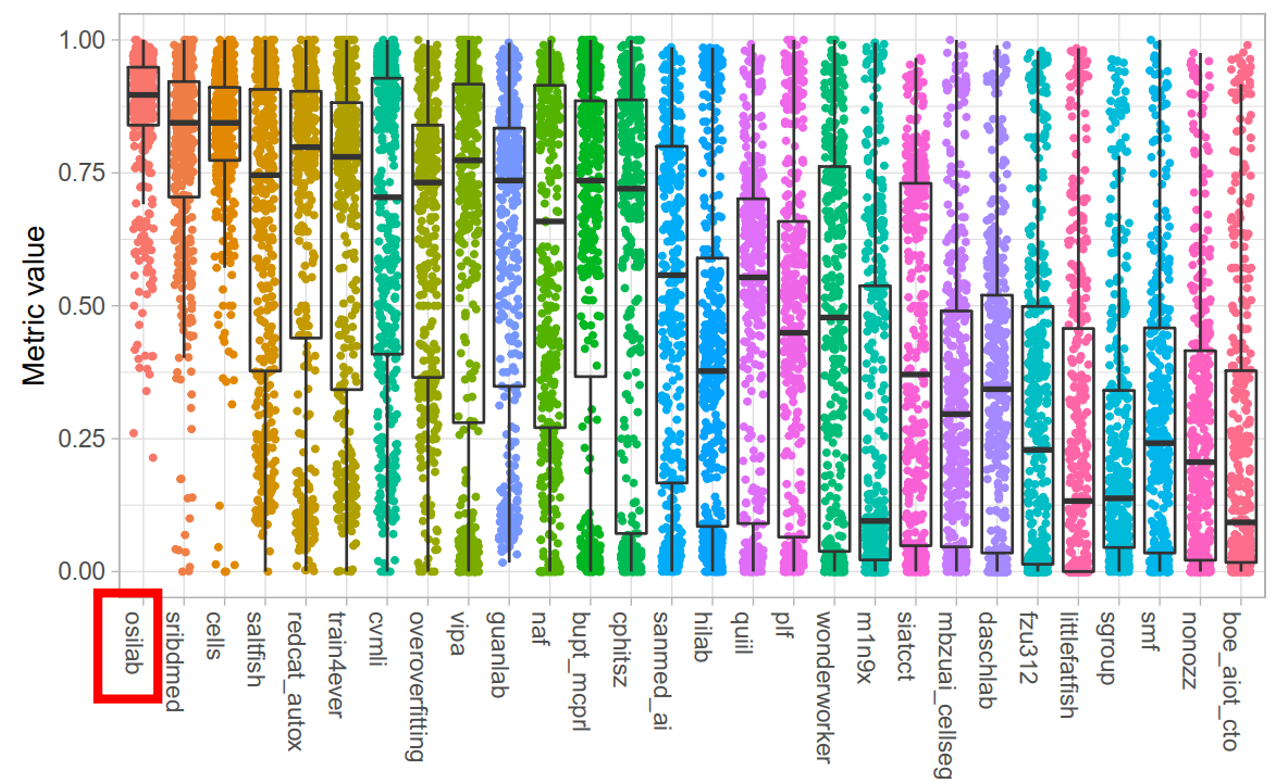

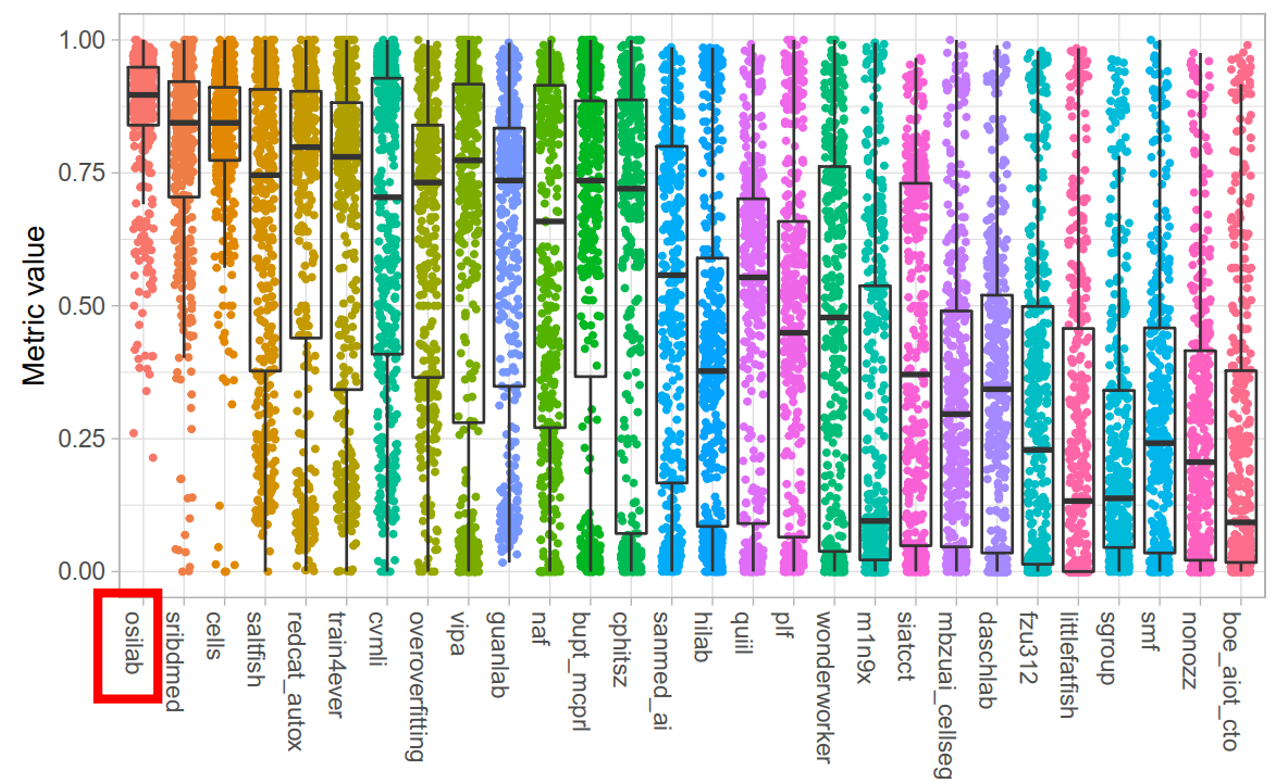

+# 4. Results

+### **Validation Dataset**

+- Quantitative Evaluation

+ - Our MEDIAR achieved **0.9067** validation mean F1-score.

+- Qualitative Evaluation

+

+

+- **Gradient Flow Tracking** : We utilize gradient flow tracking proposed by [CellPose](https://github.com/MouseLand/cellpose).

+

+- **Ensemble with Stochastic TTA**: During the inference, the MEIDAR conduct prediction as sliding-window manner with importance map generated by the gaussian filter. We use two fine-tuned models from phase1 and phase2 pretraining, and ensemble their outputs by summation. For each outputs, test-time augmentation is used.

+# 3. Experiments

+

+### **Dataset**

+- Official Dataset

+ - We are provided the target dataset from [Weakly Supervised Cell Segmentation in Multi-modality High-Resolution Microscopy Images](https://neurips22-cellseg.grand-challenge.org/). It consists of 1,000 labeled images, 1,712 unlabeled images and 13 unlabeled whole slide image from various microscopy types, tissue types, and staining types. Validation set is given with 101 images including 1 whole slide image.

+

+- Public Dataset

+ - [OmniPose](http://www.cellpose.org/dataset_omnipose) : contains mixtures of 14 bacterial species. We only use 611 bacterial cell microscopy images and discard 118 worm images.

+ - [CellPose](https://www.cellpose.org/dataset) : includes Cytoplasm, cellular microscopy, fluorescent cells images. We used 551 images by discarding 58 non-microscopy images. We convert all images as gray-scale.

+ - [LiveCell](https://github.com/sartorius-research/LIVECell) : is a large-scale dataset with 5,239 images containing 1,686,352 individual cells annotated by trained crowdsources from 8 distinct cell types.

+ - [DataScienceBowl 2018](https://www.kaggle.com/competitions/sartorius-cell-instance-segmentation/overview) : 841 images contain 37,333 cells from 22 cell types, 15 image resolutions, and five visually similar groups.

+

+### **Testing steps**

+- **Ensemble Prediction with TTA** : MEDIAR uses sliding-window inference with the overlap size between the adjacent patches as 0.6 and gaussian importance map. To predict the different views on the image, MEDIAR uses Test-Time Augmentation (TTA) for the model prediction and ensemble two models described in **Two-phase Pretraining and Fine-tuning**.

+

+- **Inference time** : MEDIAR conducts most images in less than 1sec and it depends on the image size and the number of cells, even with ensemble prediction with TTA. Detailed evaluation-time results are in the paper.

+

+### **Preprocessing & Augmentations**

+| Strategy | Type | Probability |

+|----------|:-------------|------|

+| `Clip` | Pre-processing | . |

+| `Normalization` | Pre-processing | . |

+| `Scale Intensity` | Pre-processing | . |

+| `Zoom` | Spatial Augmentation | 0.5 |

+| `Spatial Crop` | Spatial Augmentation | 1.0 |

+| `Axis Flip` | Spatial Augmentation | 0.5 |

+| `Rotation` | Spatial Augmentation | 0.5 |

+| `Cell-Aware Intensity` | Intensity Augmentation | 0.25 |

+| `Gaussian Noise` | Intensity Augmentation | 0.25 |

+| `Contrast Adjustment` | Intensity Augmentation | 0.25 |

+| `Gaussian Smoothing` | Intensity Augmentation | 0.25 |

+| `Histogram Shift` | Intensity Augmentation | 0.25 |

+| `Gaussian Sharpening` | Intensity Augmentation | 0.25 |

+| `Boundary Exclusion` | Others | . |

+

+

+| Learning Setups | Pretraining | Fine-tuning |

+|----------------------------------------------------------------------|---------------------------------------------------------|---------------------------------------------------------|

+| Initialization (Encoder) | Imagenet-1k pretrained | from Pretraining |

+| Initialization (Decoder, Head) | He normal initialization | from Pretraining|

+| Batch size | 9 | 9 |

+| Total epochs | 80 (60) | 200 (25) |

+| Optimizer | AdamW | AdamW |

+| Initial learning rate (lr) | 5e-5 | 2e-5 |

+| Lr decay schedule | Cosine scheduler (100 interval) | Cosine scheduler (100 interval) |

+| Loss function | MSE, BCE | MSE, BCE |

+

+# 4. Results

+### **Validation Dataset**

+- Quantitative Evaluation

+ - Our MEDIAR achieved **0.9067** validation mean F1-score.

+- Qualitative Evaluation

+ +

+- Failure Cases

+

+

+- Failure Cases

+ +

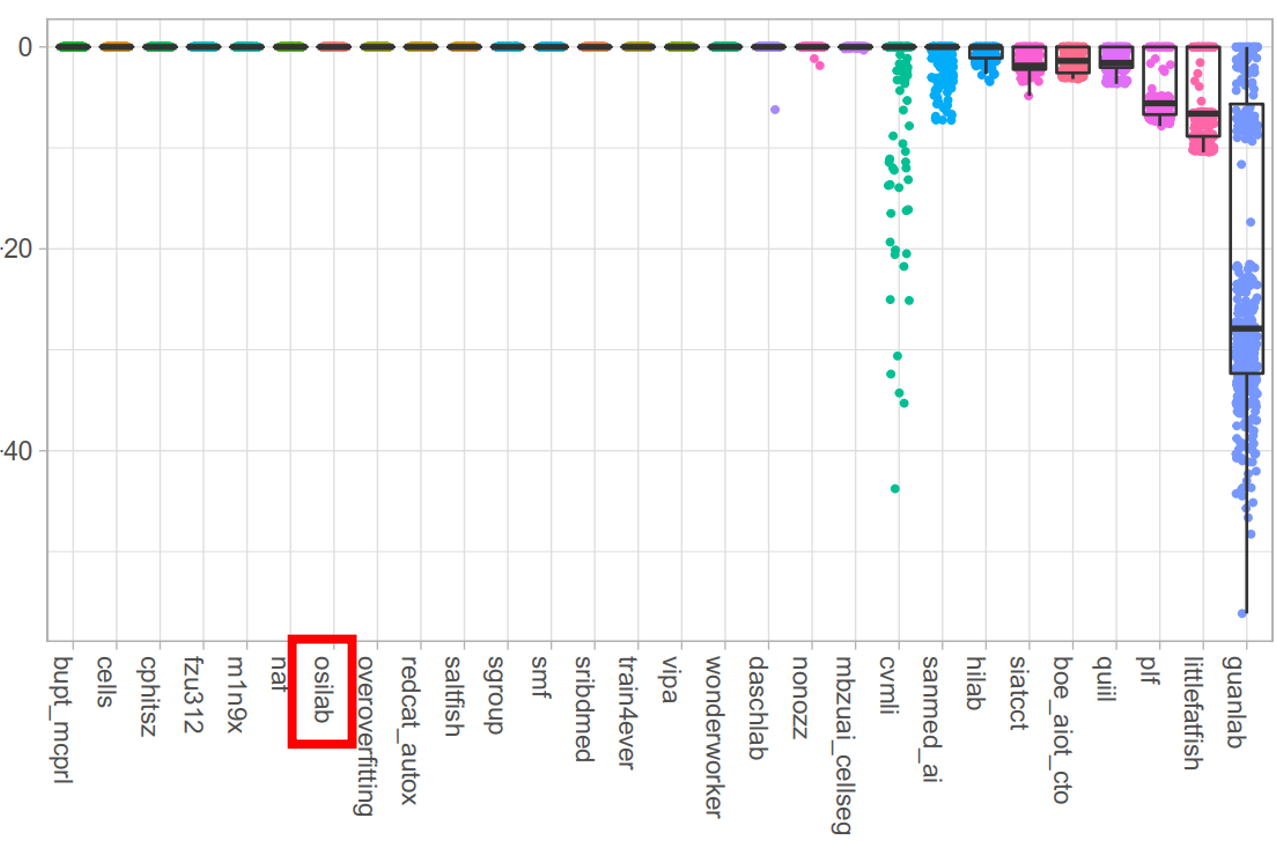

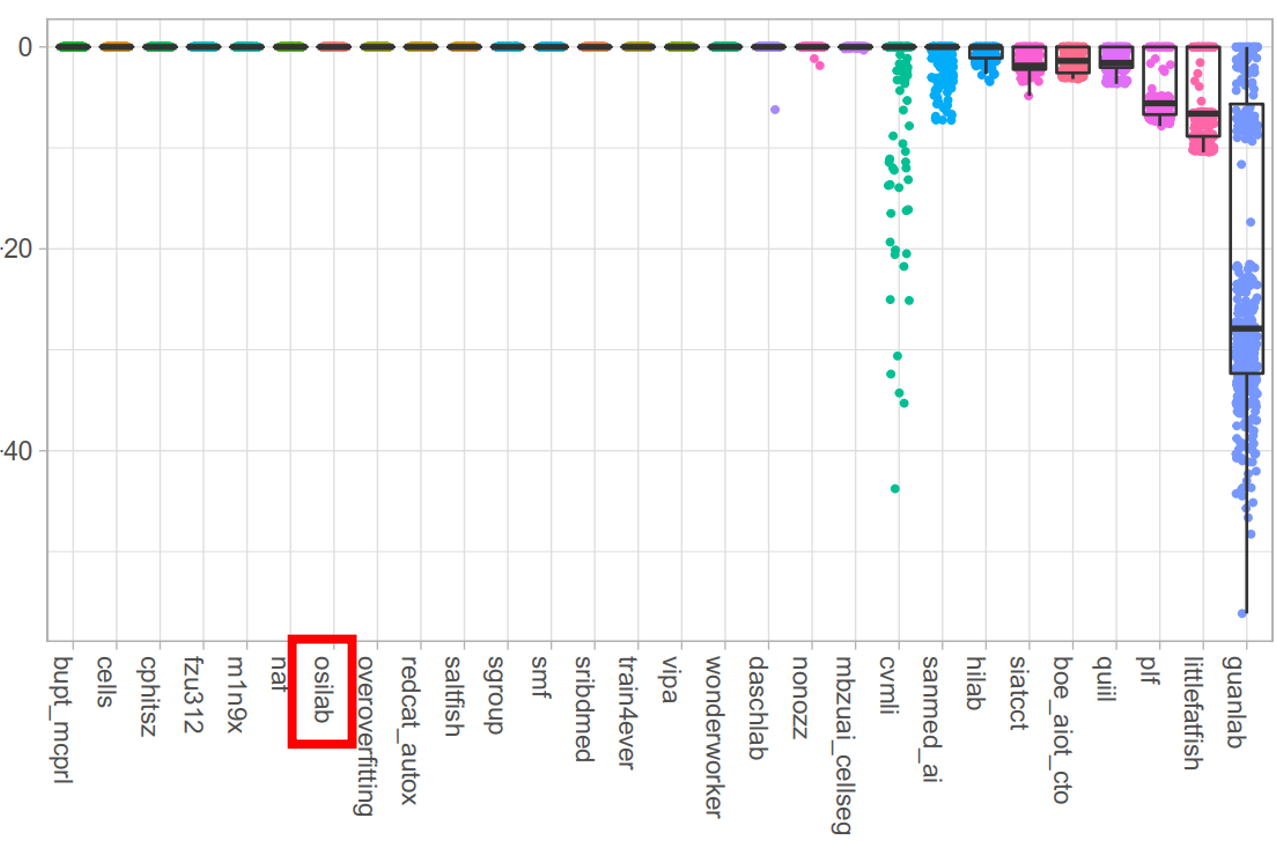

+### **Test Dataset**

+

+

+

+

+# 5. Reproducing

+

+### **Our Environment**

+| Computing Infrastructure| |

+|-------------------------|----------------------------------------------------------------------|

+| System | Ubuntu 18.04.5 LTS |

+| CPU | AMD EPYC 7543 32-Core Processor CPU@2.26GHz |

+| RAM | 500GB; 3.125MT/s |

+| GPU (number and type) | NVIDIA A5000 (24GB) 2ea |

+| CUDA version | 11.7 |

+| Programming language | Python 3.9 |

+| Deep learning framework | Pytorch (v1.12, with torchvision v0.13.1) |

+| Code dependencies | MONAI (v0.9.0), Segmentation Models (v0.3.0) |

+| Specific dependencies | None |

+

+To install requirements:

+

+```

+pip install -r requirements.txt

+wandb off

+```

+

+## Dataset

+- The datasets directories under the root should the following structure:

+

+```

+ Root

+ ├── Datasets

+ │ ├── images (images can have various extensions: .tif, .tiff, .png, .bmp ...)

+ │ │ ├── cell_00001.png

+ │ │ ├── cell_00002.tif

+ │ │ ├── cell_00003.xxx

+ │ │ ├── ...

+ │ └── labels (labels must have .tiff extension.)

+ │ │ ├── cell_00001_label.tiff

+ │ │ ├── cell_00002.label.tiff

+ │ │ ├── cell_00003.label.tiff

+ │ │ ├── ...

+ └── ...

+```

+

+Before execute the codes, run the follwing code to generate path mappting json file:

+

+```python

+python ./generate_mapping.py --root=

+```

+

+## Training

+

+To train the model(s) in the paper, run the following command:

+

+```python

+python ./main.py --config_path=

+```

+Configuration files are in `./config/*`. We provide the pretraining, fine-tuning, and prediction configs. You can refer to the configuration options in the `./config/mediar_example.json`. We also implemented the official challenge baseline code in our framework. You can run the baseline code by running the `./config/baseline.json`.

+

+## Inference

+

+To conduct prediction on the testing cases, run the following command:

+

+```python

+python predict.py --config_path=

+```

+

+## Evaluation

+If you have the labels run the following command for evaluation:

+

+```python

+python ./evaluate.py --pred_path= --gt_path=

+```

+

+The configuration files for `predict.py` is slightly different. Please refer to the config files in `./config/step3_prediction/*`.

+## Trained Models

+

+You can download MEDIAR pretrained and finetuned models here:

+

+- [Google Drive Link](https://drive.google.com/drive/folders/1RgMxHIT7WsKNjir3wXSl7BrzlpS05S18?usp=share_link).

+

+## Citation of this Work

+```

+@article{lee2022mediar,

+ title={Mediar: Harmony of data-centric and model-centric for multi-modality microscopy},

+ author={Lee, Gihun and Kim, SangMook and Kim, Joonkee and Yun, Se-Young},

+ journal={arXiv preprint arXiv:2212.03465},

+ year={2022}

+}

+```

+

diff --git a/annolid/segmentation/MEDIAR/SetupDict.py b/annolid/segmentation/MEDIAR/SetupDict.py

new file mode 100644

index 00000000..d9b7aebd

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/SetupDict.py

@@ -0,0 +1,39 @@

+import torch.optim as optim

+import torch.optim.lr_scheduler as lr_scheduler

+import monai

+

+from annolid.segmentation.MEDIAR import core

+from annolid.segmentation.MEDIAR.train_tools import models

+from annolid.segmentation.MEDIAR.train_tools.models import *

+

+__all__ = ["TRAINER", "OPTIMIZER", "SCHEDULER"]

+

+TRAINER = {

+ "baseline": core.Baseline.Trainer,

+ "mediar": core.MEDIAR.Trainer,

+}

+

+PREDICTOR = {

+ "baseline": core.Baseline.Predictor,

+ "mediar": core.MEDIAR.Predictor,

+ "ensemble_mediar": core.MEDIAR.EnsemblePredictor,

+}

+

+MODELS = {

+ "unet": monai.networks.nets.UNet,

+ "unetr": monai.networks.nets.unetr.UNETR,

+ "swinunetr": monai.networks.nets.SwinUNETR,

+ "mediar-former": models.MEDIARFormer,

+}

+

+OPTIMIZER = {

+ "sgd": optim.SGD,

+ "adam": optim.Adam,

+ "adamw": optim.AdamW,

+}

+

+SCHEDULER = {

+ "step": lr_scheduler.StepLR,

+ "multistep": lr_scheduler.MultiStepLR,

+ "cosine": lr_scheduler.CosineAnnealingLR,

+}

diff --git a/annolid/segmentation/MEDIAR/__init__.py b/annolid/segmentation/MEDIAR/__init__.py

new file mode 100644

index 00000000..e69de29b

diff --git a/annolid/segmentation/MEDIAR/config/baseline.json b/annolid/segmentation/MEDIAR/config/baseline.json

new file mode 100644

index 00000000..186910a4

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/config/baseline.json

@@ -0,0 +1,60 @@

+{

+ "data_setups":{

+ "labeled":{

+ "root": "/home/gihun/data/CellSeg/",

+ "mapping_file": "./train_tools/data_utils/mapping_labeled.json",

+ "tuning_mapping_file": "/home/gihun/CellSeg/train_tools/data_utils/mapping_tuning.json",

+ "batch_size": 8,

+ "valid_portion": 0.1

+ },

+ "unlabeled":{

+ "enabled": false

+ },

+ "public":{

+ "enabled": false

+ }

+ },

+ "train_setups":{

+ "model":{

+ "name": "swinunetr",

+ "params": {

+ "img_size": 512,

+ "in_channels": 3,

+ "out_channels": 3,

+ "spatial_dims": 2

+ },

+ "pretrained":{

+ "enabled": false

+ }

+ },

+ "trainer": {

+ "name": "baseline",

+ "params": {

+ "num_epochs": 200,

+ "valid_frequency": 1,

+ "device": "cuda:0",

+ "algo_params": {}

+ }

+ },

+ "optimizer":{

+ "name": "adamw",

+ "params": {"lr": 5e-5}

+ },

+ "scheduler":{

+ "enabled": false

+ },

+ "seed": 19940817

+ },

+ "pred_setups":{

+ "input_path":"/home/gihun/data/CellSeg/Official/TuningSet",

+ "output_path": "./results/baseline",

+ "make_submission": true,

+ "exp_name": "baseline",

+ "algo_params": {}

+ },

+ "wandb_setups":{

+ "project": "CellSeg",

+ "group": "Baseline",

+ "name": "baseline"

+ }

+}

\ No newline at end of file

diff --git a/annolid/segmentation/MEDIAR/config/mediar_example.json b/annolid/segmentation/MEDIAR/config/mediar_example.json

new file mode 100644

index 00000000..11c99495

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/config/mediar_example.json

@@ -0,0 +1,72 @@

+{

+ "data_setups":{

+ "labeled":{

+ "root": "/home/gihun/data/CellSeg/",

+ "mapping_file": "./train_tools/data_utils/mapping_labeled.json",

+ "amplified": false,

+ "batch_size": 8,

+ "valid_portion": 0.1

+ },

+ "public":{

+ "enabled": true,

+ "params":{

+ "root": "/home/gihun/data/CellSeg/",

+ "mapping_file": "./train_tools/data_utils/mapping_public.json",

+ "batch_size": 1

+ }

+ },

+ "unlabeled":{

+ "enabled": false

+ }

+ },

+ "train_setups":{

+ "model":{

+ "name": "mediar-former",

+ "params": {

+ "encoder_name": "mit_b5",

+ "encoder_weights": "imagenet",

+ "decoder_channels": [1024, 512, 256, 128, 64],

+ "decoder_pab_channels": 256,

+ "in_channels": 3,

+ "classes": 3

+ },

+ "pretrained":{

+ "enabled": false,

+ "weights": "./weights/pretrained/phase2.pth",

+ "strict": false

+ }

+ },

+ "trainer": {

+ "name": "mediar",

+ "params": {

+ "num_epochs": 200,

+ "valid_frequency": 1,

+ "device": "cuda:0",

+ "amp": true,

+ "algo_params": {"with_public": false}

+ }

+ },

+ "optimizer":{

+ "name": "adamw",

+ "params": {"lr": 5e-5}

+ },

+ "scheduler":{

+ "enabled": true,

+ "name": "cosine",

+ "params": {"T_max": 100, "eta_min": 1e-7}

+ },

+ "seed": 19940817

+ },

+ "pred_setups":{

+ "input_path":"/home/gihun/data/CellSeg/Official/TuningSet",

+ "output_path": "./mediar_example",

+ "make_submission": true,

+ "exp_name": "mediar_example",

+ "algo_params": {"use_tta": false}

+ },

+ "wandb_setups":{

+ "project": "CellSeg",

+ "group": "MEDIAR",

+ "name": "mediar_example"

+ }

+}

\ No newline at end of file

diff --git a/annolid/segmentation/MEDIAR/config/pred/pred_mediar.json b/annolid/segmentation/MEDIAR/config/pred/pred_mediar.json

new file mode 100644

index 00000000..65493771

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/config/pred/pred_mediar.json

@@ -0,0 +1,17 @@

+{

+ "pred_setups":{

+ "name": "medair",

+ "input_path":"input_path",

+ "output_path": "./test",

+ "make_submission": true,

+ "model_path": "model_path",

+ "device": "cuda:0",

+ "model":{

+ "name": "mediar-former",

+ "params": {},

+ "pretrained":{

+ "enabled": false

+ }

+ }

+ }

+}

\ No newline at end of file

diff --git a/annolid/segmentation/MEDIAR/config/step1_pretraining/phase1.json b/annolid/segmentation/MEDIAR/config/step1_pretraining/phase1.json

new file mode 100644

index 00000000..a8baee70

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/config/step1_pretraining/phase1.json

@@ -0,0 +1,55 @@

+{

+ "data_setups":{

+ "labeled":{

+ "root": "/home/gihun/MEDIAR/",

+ "mapping_file": "./train_tools/data_utils/mapping_public.json",

+ "mapping_file_tuning": "/home/gihun/MEDIAR/train_tools/data_utils/mapping_tuning.json",

+ "batch_size": 9,

+ "valid_portion": 0

+ },

+ "public":{

+ "enabled": false,

+ "params":{}

+ }

+ },

+ "train_setups":{

+ "model":{

+ "name": "mediar-former",

+ "params": {},

+ "pretrained":{

+ "enabled": false

+ }

+ },

+ "trainer": {

+ "name": "mediar",

+ "params": {

+ "num_epochs": 80,

+ "valid_frequency": 10,

+ "device": "cuda:0",

+ "amp": true,

+ "algo_params": {"with_public": false}

+ }

+ },

+ "optimizer":{

+ "name": "adamw",

+ "ft_rate": 1.0,

+ "params": {"lr": 5e-5}

+ },

+ "scheduler":{

+ "enabled": true,

+ "name": "cosine",

+ "params": {"T_max": 80, "eta_min": 1e-6}

+ },

+ "seed": 19940817

+ },

+ "pred_setups":{

+ "input_path":"/home/gihun/MEDIAR/data/Official/Tuning/images",

+ "output_path": "./mediar_pretrained_phase1",

+ "make_submission": false

+ },

+ "wandb_setups":{

+ "project": "CellSeg",

+ "group": "Pretraining",

+ "name": "phase1"

+ }

+}

\ No newline at end of file

diff --git a/annolid/segmentation/MEDIAR/config/step1_pretraining/phase2.json b/annolid/segmentation/MEDIAR/config/step1_pretraining/phase2.json

new file mode 100644

index 00000000..b13e4bb4

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/config/step1_pretraining/phase2.json

@@ -0,0 +1,58 @@

+{

+ "data_setups":{

+ "labeled":{

+ "root": "/home/gihun/MEDIAR/",

+ "mapping_file": "./train_tools/data_utils/mapping_labeled.json",

+ "mapping_file_tuning": "/home/gihun/MEDIAR/train_tools/data_utils/mapping_tuning.json",

+ "join_mapping_file": "./train_tools/data_utils/mapping_public.json",

+ "batch_size": 9,

+ "valid_portion": 0

+ },

+ "unlabeled":{

+ "enabled": false

+ },

+ "public":{

+ "enabled": false

+ }

+ },

+ "train_setups":{

+ "model":{

+ "name": "mediar-former",

+ "params": {},

+ "pretrained":{

+ "enabled": false

+ }

+ },

+ "trainer": {

+ "name": "mediar",

+ "params": {

+ "num_epochs": 60,

+ "valid_frequency": 10,

+ "device": "cuda:0",

+ "amp": true,

+ "algo_params": {"with_public": false}

+ }

+ },

+ "optimizer":{

+ "name": "adamw",

+ "ft_rate": 1.0,

+ "params": {"lr": 5e-5}

+ },

+ "scheduler":{

+ "enabled": true,

+ "name": "cosine",

+ "params": {"T_max": 60, "eta_min": 1e-6}

+ },

+ "seed": 19940817

+ },

+ "pred_setups":{

+ "input_path":"/home/gihun/MEDIAR/data/Official/Tuning/images",

+ "output_path": "./mediar_pretrain_phase2",

+ "make_submission": false

+ },

+ "wandb_setups":{

+ "project": "CellSeg",

+ "group": "Pretraining",

+ "name": "phase2"

+ }

+}

\ No newline at end of file

diff --git a/annolid/segmentation/MEDIAR/config/step2_finetuning/finetuning1.json b/annolid/segmentation/MEDIAR/config/step2_finetuning/finetuning1.json

new file mode 100644

index 00000000..dbdea432

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/config/step2_finetuning/finetuning1.json

@@ -0,0 +1,66 @@

+{

+ "data_setups":{

+ "labeled":{

+ "root": "/home/gihun/MEDIAR/",

+ "mapping_file": "./train_tools/data_utils/mapping_labeled.json",

+ "mapping_file_tuning": "/home/gihun/MEDIAR/train_tools/data_utils/mapping_tuning.json",

+ "amplified": true,

+ "batch_size": 8,

+ "valid_portion": 0.0

+ },

+ "public":{

+ "enabled": false,

+ "params":{

+ "root": "/home/gihun/MEDIAR/",

+ "mapping_file": "./train_tools/data_utils/mapping_public.json",

+ "batch_size": 1

+ }

+ },

+ "unlabeled":{

+ "enabled": false

+ }

+ },

+ "train_setups":{

+ "model":{

+ "name": "mediar-former",

+ "params": {},

+ "pretrained":{

+ "enabled": true,

+ "weights": "./weights/pretrained/phase1.pth",

+ "strict": false

+ }

+ },

+ "trainer": {

+ "name": "mediar",

+ "params": {

+ "num_epochs": 200,

+ "valid_frequency": 1,

+ "device": "cuda:7",

+ "amp": true,

+ "algo_params": {"with_public": false}

+ }

+ },

+ "optimizer":{

+ "name": "adamw",

+ "params": {"lr": 2e-5}

+ },

+ "scheduler":{

+ "enabled": true,

+ "name": "cosine",

+ "params": {"T_max": 100, "eta_min": 1e-7}

+ },

+ "seed": 19940817

+ },

+ "pred_setups":{

+ "input_path":"/home/gihun/MEDIAR/data/Official/Tuning/images",

+ "output_path": "./results/",

+ "make_submission": true,

+ "exp_name": "mediar_from_phase1",

+ "algo_params": {"use_tta": false}

+ },

+ "wandb_setups":{

+ "project": "CellSeg",

+ "group": "Fine-tuning",

+ "name": "from_phase1"

+ }

+}

\ No newline at end of file

diff --git a/annolid/segmentation/MEDIAR/config/step2_finetuning/finetuning2.json b/annolid/segmentation/MEDIAR/config/step2_finetuning/finetuning2.json

new file mode 100644

index 00000000..149a0f5d

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/config/step2_finetuning/finetuning2.json

@@ -0,0 +1,66 @@

+{

+ "data_setups":{

+ "labeled":{

+ "root": "/home/gihun/MEDIAR/",

+ "mapping_file": "./train_tools/data_utils/mapping_labeled.json",

+ "mapping_file_tuning": "/home/gihun/MEDIAR/train_tools/data_utils/mapping_tuning.json",

+ "amplified": true,

+ "batch_size": 8,

+ "valid_portion": 0.0

+ },

+ "public":{

+ "enabled": true,

+ "params":{

+ "root": "/home/gihun/MEDIAR/",

+ "mapping_file": "./train_tools/data_utils/mapping_public.json",

+ "batch_size": 1

+ }

+ },

+ "unlabeled":{

+ "enabled": false

+ }

+ },

+ "train_setups":{

+ "model":{

+ "name": "mediar-former",

+ "params": {},

+ "pretrained":{

+ "enabled": true,

+ "weights": "./weights/pretrained/phase2.pth",

+ "strict": false

+ }

+ },

+ "trainer": {

+ "name": "mediar",

+ "params": {

+ "num_epochs": 50,

+ "valid_frequency": 1,

+ "device": "cuda:0",

+ "amp": true,

+ "algo_params": {"with_public": true}

+ }

+ },

+ "optimizer":{

+ "name": "adamw",

+ "params": {"lr": 2e-5}

+ },

+ "scheduler":{

+ "enabled": true,

+ "name": "cosine",

+ "params": {"T_max": 100, "eta_min": 1e-7}

+ },

+ "seed": 19940817

+ },

+ "pred_setups":{

+ "input_path":"/home/gihun/MEDIAR/data/Official/Tuning/images",

+ "output_path": "./results/from_phase2",

+ "make_submission": true,

+ "exp_name": "mediar_from_phase2",

+ "algo_params": {"use_tta": false}

+ },

+ "wandb_setups":{

+ "project": "CellSeg",

+ "group": "Fine-tuning",

+ "name": "from_phase2"

+ }

+}

\ No newline at end of file

diff --git a/annolid/segmentation/MEDIAR/config/step3_prediction/base_prediction.json b/annolid/segmentation/MEDIAR/config/step3_prediction/base_prediction.json

new file mode 100644

index 00000000..e253daea

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/config/step3_prediction/base_prediction.json

@@ -0,0 +1,16 @@

+{

+ "pred_setups":{

+ "name": "mediar",

+ "input_path":"/home/gihun/MEDIAR/data/Official/Tuning/images",

+ "output_path": "./results/mediar_base_prediction",

+ "make_submission": true,

+ "model_path": "./weights/finetuned/from_phase1.pth",

+ "device": "cuda:7",

+ "model":{

+ "name": "mediar-former",

+ "params": {}

+ },

+ "exp_name": "mediar_p1_base",

+ "algo_params": {"use_tta": false}

+ }

+}

\ No newline at end of file

diff --git a/annolid/segmentation/MEDIAR/config/step3_prediction/ensemble_tta.json b/annolid/segmentation/MEDIAR/config/step3_prediction/ensemble_tta.json

new file mode 100644

index 00000000..4d3910b6

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/config/step3_prediction/ensemble_tta.json

@@ -0,0 +1,23 @@

+{

+ "pred_setups":{

+ "name": "ensemble_mediar",

+ "input_path":"/home/gihun/MEDIAR/data/Official/Tuning/images",

+ "output_path": "./results/mediar_ensemble_tta",

+ "make_submission": true,

+ "model_path1": "./weights/finetuned/from_phase1.pth",

+ "model_path2": "./weights/finetuned/from_phase2.pth",

+ "device": "cuda:0",

+ "model":{

+ "name": "mediar-former",

+ "params": {

+ "encoder_name":"mit_b5",

+ "decoder_channels": [1024, 512, 256, 128, 64],

+ "decoder_pab_channels": 256,

+ "in_channels":3,

+ "classes":3

+ }

+ },

+ "exp_name": "mediar_ensemble_tta",

+ "algo_params": {"use_tta": true}

+ }

+}

\ No newline at end of file

diff --git a/annolid/segmentation/MEDIAR/core/BasePredictor.py b/annolid/segmentation/MEDIAR/core/BasePredictor.py

new file mode 100644

index 00000000..c1c9753d

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/core/BasePredictor.py

@@ -0,0 +1,126 @@

+import torch

+import numpy as np

+import time

+import os

+import tifffile as tif

+

+from datetime import datetime

+from zipfile import ZipFile

+from pytz import timezone

+from annolid.segmentation.MEDIAR.train_tools.data_utils.transforms import get_pred_transforms

+

+

+class BasePredictor:

+ def __init__(

+ self,

+ model,

+ device,

+ input_path,

+ output_path,

+ make_submission=False,

+ exp_name=None,

+ algo_params=None,

+ ):

+ self.model = model

+ self.device = device

+ self.input_path = input_path

+ self.output_path = output_path

+ self.make_submission = make_submission

+ self.exp_name = exp_name

+

+ # Assign algoritm-specific arguments

+ if algo_params:

+ self.__dict__.update((k, v) for k, v in algo_params.items())

+

+ # Prepare inference environments

+ self._setups()

+

+ @torch.no_grad()

+ def conduct_prediction(self):

+ self.model.to(self.device)

+ self.model.eval()

+ total_time = 0

+ total_times = []

+

+ for img_name in self.img_names:

+ img_data = self._get_img_data(img_name)

+ img_data = img_data.to(self.device)

+

+ start = time.time()

+

+ pred_mask = self._inference(img_data)

+ pred_mask = self._post_process(pred_mask.squeeze(0).cpu().numpy())

+

+ self.write_pred_mask(

+ pred_mask, self.output_path, img_name, self.make_submission

+ )

+ self.save_prediction(

+ pred_mask, image_name=img_name)

+ end = time.time()

+

+ time_cost = end - start

+ total_times.append(time_cost)

+ total_time += time_cost

+ print(

+ f"Prediction finished: {img_name}; img size = {img_data.shape}; costing: {time_cost:.2f}s"

+ )

+

+ print(f"\n Total Time Cost: {total_time:.2f}s")

+

+ if self.make_submission:

+ fname = "%s.zip" % self.exp_name

+

+ os.makedirs("./submissions", exist_ok=True)

+ submission_path = os.path.join("./submissions", fname)

+

+ with ZipFile(submission_path, "w") as zipObj2:

+ pred_names = sorted(os.listdir(self.output_path))

+ for pred_name in pred_names:

+ pred_path = os.path.join(self.output_path, pred_name)

+ zipObj2.write(pred_path)

+

+ print("\n>>>>> Submission file is saved at: %s\n" % submission_path)

+

+ return time_cost

+

+ def write_pred_mask(self, pred_mask,

+ output_dir,

+ image_name,

+ submission=False):

+

+ # All images should contain at least 5 cells

+ if submission:

+ if not (np.max(pred_mask) > 5):

+ print("[!Caution] Only %d Cells Detected!!!\n" %

+ np.max(pred_mask))

+

+ file_name = image_name.split(".")[0]

+ file_name = file_name + "_label.tiff"

+ file_path = os.path.join(output_dir, file_name)

+

+ tif.imwrite(file_path, pred_mask, compression="zlib")

+

+ def _setups(self):

+ self.pred_transforms = get_pred_transforms()

+ os.makedirs(self.output_path, exist_ok=True)

+

+ now = datetime.now(timezone("Asia/Seoul"))

+ dt_string = now.strftime("%m%d_%H%M")

+ self.exp_name = (

+ self.exp_name + dt_string if self.exp_name is not None else dt_string

+ )

+

+ self.img_names = sorted(os.listdir(self.input_path))

+

+ def _get_img_data(self, img_name):

+ img_path = os.path.join(self.input_path, img_name)

+ img_data = self.pred_transforms(img_path)

+ img_data = img_data.unsqueeze(0)

+

+ return img_data

+

+ def _inference(self, img_data):

+ raise NotImplementedError

+

+ def _post_process(self, pred_mask):

+ raise NotImplementedError

diff --git a/annolid/segmentation/MEDIAR/core/BaseTrainer.py b/annolid/segmentation/MEDIAR/core/BaseTrainer.py

new file mode 100644

index 00000000..102f1b4e

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/core/BaseTrainer.py

@@ -0,0 +1,240 @@

+import torch

+import numpy as np

+from tqdm import tqdm

+from monai.inferers import sliding_window_inference

+from monai.metrics import CumulativeAverage

+from monai.transforms import (

+ Activations,

+ AsDiscrete,

+ Compose,

+ EnsureType,

+)

+

+import os, sys

+import copy

+

+sys.path.insert(0, os.path.abspath(os.path.join(os.getcwd(), "../")))

+

+from annolid.segmentation.MEDIAR.core.utils import print_learning_device, print_with_logging

+from annolid.segmentation.MEDIAR.train_tools.measures import evaluate_f1_score_cellseg

+

+

+class BaseTrainer:

+ """Abstract base class for trainer implementations"""

+

+ def __init__(

+ self,

+ model,

+ dataloaders,

+ optimizer,

+ scheduler=None,

+ criterion=None,

+ num_epochs=100,

+ device="cuda:0",

+ no_valid=False,

+ valid_frequency=1,

+ amp=False,

+ algo_params=None,

+ ):

+ self.model = model.to(device)

+ self.dataloaders = dataloaders

+ self.optimizer = optimizer

+ self.scheduler = scheduler

+ self.criterion = criterion

+ self.num_epochs = num_epochs

+ self.no_valid = no_valid

+ self.valid_frequency = valid_frequency

+ self.device = device

+ self.amp = amp

+ self.best_weights = None

+ self.best_f1_score = 0.1

+

+ # FP-16 Scaler

+ self.scaler = torch.cuda.amp.GradScaler() if amp else None

+

+ # Assign algoritm-specific arguments

+ if algo_params:

+ self.__dict__.update((k, v) for k, v in algo_params.items())

+

+ # Cumulitive statistics

+ self.loss_metric = CumulativeAverage()

+ self.f1_metric = CumulativeAverage()

+

+ # Post-processing functions

+ self.post_pred = Compose(

+ [EnsureType(), Activations(softmax=True), AsDiscrete(threshold=0.5)]

+ )

+ self.post_gt = Compose([EnsureType(), AsDiscrete(to_onehot=None)])

+

+ def train(self):

+ """Train the model"""

+

+ # Print learning device name

+ print_learning_device(self.device)

+

+ # Learning process

+ for epoch in range(1, self.num_epochs + 1):

+ print(f"[Round {epoch}/{self.num_epochs}]")

+

+ # Train Epoch Phase

+ print(">>> Train Epoch")

+ train_results = self._epoch_phase("train")

+ print_with_logging(train_results, epoch)

+

+ if self.scheduler is not None:

+ self.scheduler.step()

+

+ if epoch % self.valid_frequency == 0:

+ if not self.no_valid:

+ # Valid Epoch Phase

+ print(">>> Valid Epoch")

+ valid_results = self._epoch_phase("valid")

+ print_with_logging(valid_results, epoch)

+

+ if "Valid_F1_Score" in valid_results.keys():

+ current_f1_score = valid_results["Valid_F1_Score"]

+ self._update_best_model(current_f1_score)

+ else:

+ print(">>> TuningSet Epoch")

+ tuning_cell_counts = self._tuningset_evaluation()

+ tuning_count_dict = {"TuningSet_Cell_Count": tuning_cell_counts}

+ print_with_logging(tuning_count_dict, epoch)

+

+ current_cell_count = tuning_cell_counts

+ self._update_best_model(current_cell_count)

+

+ print("-" * 50)

+

+ self.best_f1_score = 0

+

+ if self.best_weights is not None:

+ self.model.load_state_dict(self.best_weights)

+

+ def _epoch_phase(self, phase):

+ """Learning process for 1 Epoch (for different phases).

+

+ Args:

+ phase (str): "train", "valid", "test"

+

+ Returns:

+ dict: statistics for the phase results

+ """

+ phase_results = {}

+

+ # Set model mode

+ self.model.train() if phase == "train" else self.model.eval()

+

+ # Epoch process

+ for batch_data in tqdm(self.dataloaders[phase]):

+ images = batch_data["img"].to(self.device)

+ labels = batch_data["label"].to(self.device)

+ self.optimizer.zero_grad()

+

+ # Forward pass

+ with torch.set_grad_enabled(phase == "train"):

+ outputs = self.model(images)

+ loss = self.criterion(outputs, labels)

+ self.loss_metric.append(loss)

+

+ # Backward pass

+ if phase == "train":

+ loss.backward()

+ self.optimizer.step()

+

+ # Update metrics

+ phase_results = self._update_results(

+ phase_results, self.loss_metric, "loss", phase

+ )

+

+ return phase_results

+

+ @torch.no_grad()

+ def _tuningset_evaluation(self):

+ cell_counts_total = []

+ self.model.eval()

+

+ for batch_data in tqdm(self.dataloaders["tuning"]):

+ images = batch_data["img"].to(self.device)

+ if images.shape[-1] > 5000:

+ continue

+

+ outputs = sliding_window_inference(

+ images,

+ roi_size=512,

+ sw_batch_size=4,

+ predictor=self.model,

+ padding_mode="constant",

+ mode="gaussian",

+ )

+

+ outputs = outputs.squeeze(0)

+ outputs, _ = self._post_process(outputs, None)

+ count = len(np.unique(outputs) - 1)

+ cell_counts_total.append(count)

+

+ cell_counts_total_sum = np.sum(cell_counts_total)

+ print("Cell Counts Total: (%d)" % (cell_counts_total_sum))

+

+ return cell_counts_total_sum

+

+ def _update_results(self, phase_results, metric, metric_key, phase="train"):

+ """Aggregate and flush metrics

+

+ Args:

+ phase_results (dict): base dictionary to log metrics

+ metric (_type_): cumulated metrics

+ metric_key (_type_): name of metric

+ phase (str, optional): current phase name. Defaults to "train".

+

+ Returns:

+ dict: dictionary of metrics for the current phase

+ """

+

+ # Refine metrics name

+ metric_key = "_".join([phase, metric_key]).title()

+

+ # Aggregate metrics

+ metric_item = round(metric.aggregate().item(), 4)

+

+ # Log metrics to dictionary

+ phase_results[metric_key] = metric_item

+

+ # Flush metrics

+ metric.reset()

+

+ return phase_results

+

+ def _update_best_model(self, current_f1_score):

+ if current_f1_score > self.best_f1_score:

+ self.best_weights = copy.deepcopy(self.model.state_dict())

+ self.best_f1_score = current_f1_score

+ print(

+ "\n>>>> Update Best Model with score: {}\n".format(self.best_f1_score)

+ )

+ else:

+ pass

+

+ def _inference(self, images, phase="train"):

+ """inference methods for different phase"""

+ if phase != "train":

+ outputs = sliding_window_inference(

+ images,

+ roi_size=512,

+ sw_batch_size=4,

+ predictor=self.model,

+ padding_mode="reflect",

+ mode="gaussian",

+ overlap=0.5,

+ )

+ else:

+ outputs = self.model(images)

+

+ return outputs

+

+ def _post_process(self, outputs, labels):

+ return outputs, labels

+

+ def _get_f1_metric(self, masks_pred, masks_true):

+ f1_score = evaluate_f1_score_cellseg(masks_true, masks_pred)[-1]

+

+ return f1_score

diff --git a/annolid/segmentation/MEDIAR/core/Baseline/Predictor.py b/annolid/segmentation/MEDIAR/core/Baseline/Predictor.py

new file mode 100644

index 00000000..b4036f40

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/core/Baseline/Predictor.py

@@ -0,0 +1,59 @@

+import torch

+import os, sys

+from skimage import morphology, measure

+from monai.inferers import sliding_window_inference

+

+sys.path.insert(0, os.path.abspath(os.path.join(os.getcwd(), "../../")))

+

+from annolid.segmentation.MEDIAR.core.BasePredictor import BasePredictor

+

+__all__ = ["Predictor"]

+

+

+class Predictor(BasePredictor):

+ def __init__(

+ self,

+ model,

+ device,

+ input_path,

+ output_path,

+ make_submission=False,

+ exp_name=None,

+ algo_params=None,

+ ):

+ super(Predictor, self).__init__(

+ model,

+ device,

+ input_path,

+ output_path,

+ make_submission,

+ exp_name,

+ algo_params,

+ )

+

+ def _inference(self, img_data):

+ pred_mask = sliding_window_inference(

+ img_data,

+ 512,

+ 4,

+ self.model,

+ padding_mode="constant",

+ mode="gaussian",

+ overlap=0.6,

+ )

+

+ return pred_mask

+

+ def _post_process(self, pred_mask):

+ # Get probability map from the predicted logits

+ pred_mask = torch.from_numpy(pred_mask)

+ pred_mask = torch.softmax(pred_mask, dim=0)

+ pred_mask = pred_mask[1].cpu().numpy()

+

+ # Apply morphological post-processing

+ pred_mask = pred_mask > 0.5

+ pred_mask = morphology.remove_small_holes(pred_mask, connectivity=1)

+ pred_mask = morphology.remove_small_objects(pred_mask, 16)

+ pred_mask = measure.label(pred_mask)

+

+ return pred_mask

diff --git a/annolid/segmentation/MEDIAR/core/Baseline/Trainer.py b/annolid/segmentation/MEDIAR/core/Baseline/Trainer.py

new file mode 100644

index 00000000..1c4585e1

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/core/Baseline/Trainer.py

@@ -0,0 +1,115 @@

+from tqdm import tqdm

+from annolid.segmentation.MEDIAR.train_tools.measures import evaluate_f1_score_cellseg

+from annolid.segmentation.MEDIAR.core.Baseline.utils import create_interior_onehot, identify_instances_from_classmap

+from annolid.segmentation.MEDIAR.core.BaseTrainer import BaseTrainer

+import torch

+import os

+import sys

+import monai

+

+from monai.data import decollate_batch

+

+sys.path.insert(0, os.path.abspath(os.path.join(os.getcwd(), "../../")))

+

+

+__all__ = ["Trainer"]

+

+

+class Trainer(BaseTrainer):

+ def __init__(

+ self,

+ model,

+ dataloaders,

+ optimizer,

+ scheduler=None,

+ criterion=None,

+ num_epochs=100,

+ device="cuda:0",

+ no_valid=False,

+ valid_frequency=1,

+ amp=False,

+ algo_params=None,

+ ):

+ super(Trainer, self).__init__(

+ model,

+ dataloaders,

+ optimizer,

+ scheduler,

+ criterion,

+ num_epochs,

+ device,

+ no_valid,

+ valid_frequency,

+ amp,

+ algo_params,

+ )

+

+ # Dice loss as segmentation criterion

+ self.criterion = monai.losses.DiceCELoss(softmax=True)

+

+ def _epoch_phase(self, phase):

+ """Learning process for 1 Epoch."""

+

+ phase_results = {}

+

+ # Set model mode

+ self.model.train() if phase == "train" else self.model.eval()

+

+ # Epoch process

+ for batch_data in tqdm(self.dataloaders[phase]):

+ images = batch_data["img"].to(self.device)

+ labels = batch_data["label"].to(self.device)

+ self.optimizer.zero_grad()

+

+ # Map label masks to 3-class onehot map

+ labels_onehot = create_interior_onehot(labels)

+

+ # Forward pass

+ with torch.set_grad_enabled(phase == "train"):

+ outputs = self._inference(images, phase)

+ loss = self.criterion(outputs, labels_onehot)

+ self.loss_metric.append(loss)

+

+ if phase != "train":

+ f1_score = self._get_f1_metric(outputs, labels)

+ self.f1_metric.append(f1_score)

+

+ # Backward pass

+ if phase == "train":

+ # For the mixed precision training

+ if self.amp:

+ self.scaler.scale(loss).backward()

+ self.scaler.unscale_(self.optimizer)

+ self.scaler.step(self.optimizer)

+ self.scaler.update()

+

+ else:

+ loss.backward()

+ self.optimizer.step()

+

+ # Update metrics

+ phase_results = self._update_results(

+ phase_results, self.loss_metric, "loss", phase

+ )

+

+ if phase != "train":

+ phase_results = self._update_results(

+ phase_results, self.f1_metric, "f1_score", phase

+ )

+

+ return phase_results

+

+ def _post_process(self, outputs, labels_onehot):

+ """Conduct post-processing for outputs & labels."""

+ outputs = [self.post_pred(i) for i in decollate_batch(outputs)]

+ labels_onehot = [self.post_gt(i)

+ for i in decollate_batch(labels_onehot)]

+

+ return outputs, labels_onehot

+

+ def _get_f1_metric(self, masks_pred, masks_true):

+ masks_pred = identify_instances_from_classmap(masks_pred[0])

+ masks_true = masks_true.squeeze(0).squeeze(0).cpu().numpy()

+ f1_score = evaluate_f1_score_cellseg(masks_true, masks_pred)[-1]

+

+ return f1_score

diff --git a/annolid/segmentation/MEDIAR/core/Baseline/__init__.py b/annolid/segmentation/MEDIAR/core/Baseline/__init__.py

new file mode 100644

index 00000000..5d102375

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/core/Baseline/__init__.py

@@ -0,0 +1,2 @@

+from .Trainer import *

+from .Predictor import *

diff --git a/annolid/segmentation/MEDIAR/core/Baseline/utils.py b/annolid/segmentation/MEDIAR/core/Baseline/utils.py

new file mode 100644

index 00000000..4fd559de

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/core/Baseline/utils.py

@@ -0,0 +1,80 @@

+"""

+Adapted from the following references:

+[1] https://github.com/JunMa11/NeurIPS-CellSeg/blob/main/baseline/model_training_3class.py

+

+"""

+

+import torch

+import numpy as np

+from skimage import segmentation, morphology, measure

+import monai

+

+

+__all__ = ["create_interior_onehot", "identify_instances_from_classmap"]

+

+

+@torch.no_grad()

+def identify_instances_from_classmap(

+ class_map, cell_class=1, threshold=0.5, from_logits=True

+):

+ """Identification of cell instances from the class map"""

+

+ if from_logits:

+ class_map = torch.softmax(class_map, dim=0) # (C, H, W)

+

+ # Convert probability map to binary mask

+ pred_mask = class_map[cell_class].cpu().numpy()

+

+ # Apply morphological postprocessing

+ pred_mask = pred_mask > threshold

+ pred_mask = morphology.remove_small_holes(pred_mask, connectivity=1)

+ pred_mask = morphology.remove_small_objects(pred_mask, 16)

+ pred_mask = measure.label(pred_mask)

+

+ return pred_mask

+

+

+@torch.no_grad()

+def create_interior_onehot(inst_maps):

+ """

+ interior : (H,W), np.uint8

+ three-class map, values: 0,1,2

+ 0: background

+ 1: interior

+ 2: boundary

+ """

+ device = inst_maps.device

+

+ # Get (np.int16) array corresponding to label masks: (B, 1, H, W)

+ inst_maps = inst_maps.squeeze(1).cpu().numpy().astype(np.int16)

+

+ interior_maps = []

+

+ for inst_map in inst_maps:

+ # Create interior-edge map

+ boundary = segmentation.find_boundaries(inst_map, mode="inner")

+

+ # Refine interior-edge map

+ boundary = morphology.binary_dilation(boundary, morphology.disk(1))

+

+ # Assign label classes

+ interior_temp = np.logical_and(~boundary, inst_map > 0)

+

+ # interior_temp[boundary] = 0

+ interior_temp = morphology.remove_small_objects(interior_temp, min_size=16)

+ interior = np.zeros_like(inst_map, dtype=np.uint8)

+ interior[interior_temp] = 1

+ interior[boundary] = 2

+

+ interior_maps.append(interior)

+

+ # Aggregate interior_maps for batch

+ interior_maps = np.stack(interior_maps, axis=0).astype(np.uint8)

+

+ # Reshape as original label shape: (B, H, W)

+ interior_maps = torch.from_numpy(interior_maps).unsqueeze(1).to(device)

+

+ # Obtain one-hot map for batch

+ interior_onehot = monai.networks.one_hot(interior_maps, num_classes=3)

+

+ return interior_onehot

diff --git a/annolid/segmentation/MEDIAR/core/MEDIAR/EnsemblePredictor.py b/annolid/segmentation/MEDIAR/core/MEDIAR/EnsemblePredictor.py

new file mode 100644

index 00000000..e65685c7

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/core/MEDIAR/EnsemblePredictor.py

@@ -0,0 +1,105 @@

+import torch

+import os, sys, copy

+import numpy as np

+

+sys.path.insert(0, os.path.abspath(os.path.join(os.getcwd(), "../../")))

+

+from annolid.segmentation.MEDIAR.core.MEDIAR.Predictor import Predictor

+

+__all__ = ["EnsemblePredictor"]

+

+

+class EnsemblePredictor(Predictor):

+ def __init__(

+ self,

+ model,

+ model_aux,

+ device,

+ input_path,

+ output_path,

+ make_submission=False,

+ exp_name=None,

+ algo_params=None,

+ ):

+ super(EnsemblePredictor, self).__init__(

+ model,

+ device,

+ input_path,

+ output_path,

+ make_submission,

+ exp_name,

+ algo_params,

+ )

+ self.model_aux = model_aux

+

+ @torch.no_grad()

+ def _inference(self, img_data):

+

+ self.model_aux.to(self.device)

+ self.model_aux.eval()

+

+ img_data = img_data.to(self.device)

+ img_base = img_data

+

+ outputs_base = self._window_inference(img_base)

+ outputs_base = outputs_base.cpu().squeeze()

+

+ outputs_aux = self._window_inference(img_base, aux=True)

+ outputs_aux = outputs_aux.cpu().squeeze()

+ img_base.cpu()

+

+ if not self.use_tta:

+ pred_mask = (outputs_base + outputs_aux) / 2

+ return pred_mask

+

+ else:

+ # HorizontalFlip TTA

+ img_hflip = self.hflip_tta.apply_aug_image(img_data, apply=True)

+

+ outputs_hflip = self._window_inference(img_hflip)

+ outputs_hflip_aux = self._window_inference(img_hflip, aux=True)

+

+ outputs_hflip = self.hflip_tta.apply_deaug_mask(outputs_hflip, apply=True)

+ outputs_hflip_aux = self.hflip_tta.apply_deaug_mask(

+ outputs_hflip_aux, apply=True

+ )

+

+ outputs_hflip = outputs_hflip.cpu().squeeze()

+ outputs_hflip_aux = outputs_hflip_aux.cpu().squeeze()

+ img_hflip = img_hflip.cpu()

+

+ # VertricalFlip TTA

+ img_vflip = self.vflip_tta.apply_aug_image(img_data, apply=True)

+

+ outputs_vflip = self._window_inference(img_vflip)

+ outputs_vflip_aux = self._window_inference(img_vflip, aux=True)

+

+ outputs_vflip = self.vflip_tta.apply_deaug_mask(outputs_vflip, apply=True)

+ outputs_vflip_aux = self.vflip_tta.apply_deaug_mask(

+ outputs_vflip_aux, apply=True

+ )

+

+ outputs_vflip = outputs_vflip.cpu().squeeze()

+ outputs_vflip_aux = outputs_vflip_aux.cpu().squeeze()

+ img_vflip = img_vflip.cpu()

+

+ # Merge Results

+ pred_mask = torch.zeros_like(outputs_base)

+ pred_mask[0] = (outputs_base[0] + outputs_hflip[0] - outputs_vflip[0]) / 3

+ pred_mask[1] = (outputs_base[1] - outputs_hflip[1] + outputs_vflip[1]) / 3

+ pred_mask[2] = (outputs_base[2] + outputs_hflip[2] + outputs_vflip[2]) / 3

+

+ pred_mask_aux = torch.zeros_like(outputs_aux)

+ pred_mask_aux[0] = (

+ outputs_aux[0] + outputs_hflip_aux[0] - outputs_vflip_aux[0]

+ ) / 3

+ pred_mask_aux[1] = (

+ outputs_aux[1] - outputs_hflip_aux[1] + outputs_vflip_aux[1]

+ ) / 3

+ pred_mask_aux[2] = (

+ outputs_aux[2] + outputs_hflip_aux[2] + outputs_vflip_aux[2]

+ ) / 3

+

+ pred_mask = (pred_mask + pred_mask_aux) / 2

+

+ return pred_mask

diff --git a/annolid/segmentation/MEDIAR/core/MEDIAR/Predictor.py b/annolid/segmentation/MEDIAR/core/MEDIAR/Predictor.py

new file mode 100644

index 00000000..da3f12eb

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/core/MEDIAR/Predictor.py

@@ -0,0 +1,234 @@

+import torch

+import numpy as np

+import os, sys

+from monai.inferers import sliding_window_inference

+

+sys.path.insert(0, os.path.abspath(os.path.join(os.getcwd(), "../../")))

+

+from annolid.segmentation.MEDIAR.core.BasePredictor import BasePredictor

+from annolid.segmentation.MEDIAR.core.MEDIAR.utils import compute_masks

+

+__all__ = ["Predictor"]

+

+

+class Predictor(BasePredictor):

+ def __init__(

+ self,

+ model,

+ device,

+ input_path,

+ output_path,

+ make_submission=False,

+ exp_name=None,

+ algo_params=None,

+ ):

+ super(Predictor, self).__init__(

+ model,

+ device,

+ input_path,

+ output_path,

+ make_submission,

+ exp_name,

+ algo_params,

+ )

+ self.hflip_tta = HorizontalFlip()

+ self.vflip_tta = VerticalFlip()

+

+ @torch.no_grad()

+ def _inference(self, img_data):

+ """Conduct model prediction"""

+

+ img_data = img_data.to(self.device)

+ img_base = img_data

+ outputs_base = self._window_inference(img_base)

+ outputs_base = outputs_base.cpu().squeeze()

+ img_base.cpu()

+

+ if not self.use_tta:

+ pred_mask = outputs_base

+ return pred_mask

+

+ else:

+ # HorizontalFlip TTA

+ img_hflip = self.hflip_tta.apply_aug_image(img_data, apply=True)

+ outputs_hflip = self._window_inference(img_hflip)

+ outputs_hflip = self.hflip_tta.apply_deaug_mask(outputs_hflip, apply=True)

+ outputs_hflip = outputs_hflip.cpu().squeeze()

+ img_hflip = img_hflip.cpu()

+

+ # VertricalFlip TTA

+ img_vflip = self.vflip_tta.apply_aug_image(img_data, apply=True)

+ outputs_vflip = self._window_inference(img_vflip)

+ outputs_vflip = self.vflip_tta.apply_deaug_mask(outputs_vflip, apply=True)

+ outputs_vflip = outputs_vflip.cpu().squeeze()

+ img_vflip = img_vflip.cpu()

+

+ # Merge Results

+ pred_mask = torch.zeros_like(outputs_base)

+ pred_mask[0] = (outputs_base[0] + outputs_hflip[0] - outputs_vflip[0]) / 3

+ pred_mask[1] = (outputs_base[1] - outputs_hflip[1] + outputs_vflip[1]) / 3

+ pred_mask[2] = (outputs_base[2] + outputs_hflip[2] + outputs_vflip[2]) / 3

+

+ return pred_mask

+

+ def _window_inference(self, img_data, aux=False):

+ """Inference on RoI-sized window"""

+ outputs = sliding_window_inference(

+ img_data,

+ roi_size=512,

+ sw_batch_size=4,

+ predictor=self.model if not aux else self.model_aux,

+ padding_mode="constant",

+ mode="gaussian",

+ overlap=0.6,

+ )

+

+ return outputs

+

+ def _post_process(self, pred_mask):

+ """Generate cell instance masks."""

+ dP, cellprob = pred_mask[:2], self._sigmoid(pred_mask[-1])

+ H, W = pred_mask.shape[-2], pred_mask.shape[-1]

+

+ if np.prod(H * W) < (5000 * 5000):

+ pred_mask = compute_masks(

+ dP,

+ cellprob,

+ use_gpu=True,

+ flow_threshold=0.4,

+ device=self.device,

+ cellprob_threshold=0.5,

+ )[0]

+

+ else:

+ print("\n[Whole Slide] Grid Prediction starting...")

+ roi_size = 2000

+

+ # Get patch grid by roi_size

+ if H % roi_size != 0:

+ n_H = H // roi_size + 1

+ new_H = roi_size * n_H

+ else:

+ n_H = H // roi_size

+ new_H = H

+

+ if W % roi_size != 0:

+ n_W = W // roi_size + 1

+ new_W = roi_size * n_W

+ else:

+ n_W = W // roi_size

+ new_W = W

+

+ # Allocate values on the grid

+ pred_pad = np.zeros((new_H, new_W), dtype=np.uint32)

+ dP_pad = np.zeros((2, new_H, new_W), dtype=np.float32)

+ cellprob_pad = np.zeros((new_H, new_W), dtype=np.float32)

+

+ dP_pad[:, :H, :W], cellprob_pad[:H, :W] = dP, cellprob

+

+ for i in range(n_H):

+ for j in range(n_W):

+ print("Pred on Grid (%d, %d) processing..." % (i, j))

+ dP_roi = dP_pad[

+ :,

+ roi_size * i : roi_size * (i + 1),

+ roi_size * j : roi_size * (j + 1),

+ ]

+ cellprob_roi = cellprob_pad[

+ roi_size * i : roi_size * (i + 1),

+ roi_size * j : roi_size * (j + 1),

+ ]

+

+ pred_mask = compute_masks(

+ dP_roi,

+ cellprob_roi,

+ use_gpu=True,

+ flow_threshold=0.4,

+ device=self.device,

+ cellprob_threshold=0.5,

+ )[0]

+

+ pred_pad[

+ roi_size * i : roi_size * (i + 1),

+ roi_size * j : roi_size * (j + 1),

+ ] = pred_mask

+

+ pred_mask = pred_pad[:H, :W]

+

+ return pred_mask

+

+ def _sigmoid(self, z):

+ return 1 / (1 + np.exp(-z))

+

+

+"""

+Adapted from the following references:

+[1] https://github.com/qubvel/ttach/blob/master/ttach/transforms.py

+

+"""

+

+

+def hflip(x):

+ """flip batch of images horizontally"""

+ return x.flip(3)

+

+

+def vflip(x):

+ """flip batch of images vertically"""

+ return x.flip(2)

+

+

+class DualTransform:

+ identity_param = None

+

+ def __init__(

+ self, name: str, params,

+ ):

+ self.params = params

+ self.pname = name

+

+ def apply_aug_image(self, image, *args, **params):

+ raise NotImplementedError

+

+ def apply_deaug_mask(self, mask, *args, **params):

+ raise NotImplementedError

+

+

+class HorizontalFlip(DualTransform):

+ """Flip images horizontally (left -> right)"""

+

+ identity_param = False

+

+ def __init__(self):

+ super().__init__("apply", [False, True])

+

+ def apply_aug_image(self, image, apply=False, **kwargs):

+ if apply:

+ image = hflip(image)

+ return image

+

+ def apply_deaug_mask(self, mask, apply=False, **kwargs):

+ if apply:

+ mask = hflip(mask)

+ return mask

+

+

+class VerticalFlip(DualTransform):

+ """Flip images vertically (up -> down)"""

+

+ identity_param = False

+

+ def __init__(self):

+ super().__init__("apply", [False, True])

+

+ def apply_aug_image(self, image, apply=False, **kwargs):

+ if apply:

+ image = vflip(image)

+

+ return image

+

+ def apply_deaug_mask(self, mask, apply=False, **kwargs):

+ if apply:

+ mask = vflip(mask)

+

+ return mask

diff --git a/annolid/segmentation/MEDIAR/core/MEDIAR/Trainer.py b/annolid/segmentation/MEDIAR/core/MEDIAR/Trainer.py

new file mode 100644

index 00000000..4661f339

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/core/MEDIAR/Trainer.py

@@ -0,0 +1,172 @@

+import torch

+import torch.nn as nn

+import numpy as np

+import os, sys

+from tqdm import tqdm

+from monai.inferers import sliding_window_inference

+

+sys.path.insert(0, os.path.abspath(os.path.join(os.getcwd(), "../../")))

+

+from annolid.segmentation.MEDIAR.core.BaseTrainer import BaseTrainer

+from annolid.segmentation.MEDIAR.core.MEDIAR.utils import *

+

+__all__ = ["Trainer"]

+

+

+class Trainer(BaseTrainer):

+ def __init__(

+ self,

+ model,

+ dataloaders,

+ optimizer,

+ scheduler=None,

+ criterion=None,

+ num_epochs=100,

+ device="cuda:0",

+ no_valid=False,

+ valid_frequency=1,

+ amp=False,

+ algo_params=None,

+ ):

+ super(Trainer, self).__init__(

+ model,

+ dataloaders,

+ optimizer,

+ scheduler,

+ criterion,

+ num_epochs,

+ device,

+ no_valid,

+ valid_frequency,

+ amp,

+ algo_params,

+ )

+

+ self.mse_loss = nn.MSELoss(reduction="mean")

+ self.bce_loss = nn.BCEWithLogitsLoss(reduction="mean")

+

+ def mediar_criterion(self, outputs, labels_onehot_flows):

+ """loss function between true labels and prediction outputs"""

+

+ # Cell Recognition Loss

+ cellprob_loss = self.bce_loss(

+ outputs[:, -1],

+ torch.from_numpy(labels_onehot_flows[:, 1] > 0.5).to(self.device).float(),

+ )

+

+ # Cell Distinction Loss

+ gradient_flows = torch.from_numpy(labels_onehot_flows[:, 2:]).to(self.device)

+ gradflow_loss = 0.5 * self.mse_loss(outputs[:, :2], 5.0 * gradient_flows)

+

+ loss = cellprob_loss + gradflow_loss

+

+ return loss

+

+ def _epoch_phase(self, phase):

+ phase_results = {}

+

+ # Set model mode

+ self.model.train() if phase == "train" else self.model.eval()

+

+ # Epoch process

+ for batch_data in tqdm(self.dataloaders[phase]):

+ images, labels = batch_data["img"], batch_data["label"]

+

+ if self.with_public:

+ # Load batches sequentially from the unlabeled dataloader

+ try:

+ batch_data = next(self.public_iterator)

+ images_pub, labels_pub = batch_data["img"], batch_data["label"]

+

+ except:

+ # Assign memory loader if the cycle ends

+ self.public_iterator = iter(self.public_loader)

+ batch_data = next(self.public_iterator)

+ images_pub, labels_pub = batch_data["img"], batch_data["label"]

+

+ # Concat memory data to the batch

+ images = torch.cat([images, images_pub], dim=0)

+ labels = torch.cat([labels, labels_pub], dim=0)

+

+ images = images.to(self.device)

+ labels = labels.to(self.device)

+

+ self.optimizer.zero_grad()

+

+ # Forward pass

+ with torch.cuda.amp.autocast(enabled=self.amp):

+ with torch.set_grad_enabled(phase == "train"):

+ # Output shape is B x [grad y, grad x, cellprob] x H x W

+ outputs = self._inference(images, phase)

+

+ # Map label masks to graidnet and onehot

+ labels_onehot_flows = labels_to_flows(

+ labels, use_gpu=True, device=self.device

+ )

+ # Calculate loss

+ loss = self.mediar_criterion(outputs, labels_onehot_flows)

+ self.loss_metric.append(loss)

+

+ # Calculate valid statistics

+ if phase != "train":

+ outputs, labels = self._post_process(outputs, labels)

+ f1_score = self._get_f1_metric(outputs, labels)

+ self.f1_metric.append(f1_score)

+

+ # Backward pass

+ if phase == "train":

+ # For the mixed precision training

+ if self.amp:

+ self.scaler.scale(loss).backward()

+ self.scaler.unscale_(self.optimizer)

+ self.scaler.step(self.optimizer)

+ self.scaler.update()

+

+ else:

+ loss.backward()

+ self.optimizer.step()

+

+ # Update metrics

+ phase_results = self._update_results(

+ phase_results, self.loss_metric, "dice_loss", phase

+ )

+ if phase != "train":

+ phase_results = self._update_results(

+ phase_results, self.f1_metric, "f1_score", phase

+ )

+

+ return phase_results

+

+ def _inference(self, images, phase="train"):

+ """inference methods for different phase"""

+

+ if phase != "train":

+ outputs = sliding_window_inference(

+ images,

+ roi_size=512,

+ sw_batch_size=4,

+ predictor=self.model,

+ padding_mode="constant",

+ mode="gaussian",

+ overlap=0.5,

+ )

+ else:

+ outputs = self.model(images)

+

+ return outputs

+

+ def _post_process(self, outputs, labels=None):

+ """Predict cell instances using the gradient tracking"""

+ outputs = outputs.squeeze(0).cpu().numpy()

+ gradflows, cellprob = outputs[:2], self._sigmoid(outputs[-1])

+ outputs = compute_masks(gradflows, cellprob, use_gpu=True, device=self.device)

+ outputs = outputs[0] # (1, C, H, W) -> (C, H, W)

+

+ if labels is not None:

+ labels = labels.squeeze(0).squeeze(0).cpu().numpy()

+

+ return outputs, labels

+

+ def _sigmoid(self, z):

+ """Sigmoid function for numpy arrays"""

+ return 1 / (1 + np.exp(-z))

diff --git a/annolid/segmentation/MEDIAR/core/MEDIAR/__init__.py b/annolid/segmentation/MEDIAR/core/MEDIAR/__init__.py

new file mode 100644

index 00000000..80cc69b9

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/core/MEDIAR/__init__.py

@@ -0,0 +1,3 @@

+from .Trainer import *

+from .Predictor import *

+from .EnsemblePredictor import *

diff --git a/annolid/segmentation/MEDIAR/core/MEDIAR/utils.py b/annolid/segmentation/MEDIAR/core/MEDIAR/utils.py

new file mode 100644

index 00000000..555ea4e1

--- /dev/null

+++ b/annolid/segmentation/MEDIAR/core/MEDIAR/utils.py

@@ -0,0 +1,429 @@

+"""

+Copyright © 2022 Howard Hughes Medical Institute,

+Authored by Carsen Stringer and Marius Pachitariu.

+

+Redistribution and use in source and binary forms, with or without